Since this problem is about putting an integer somewhere for another thread to retrieve, threads that produce the integers are producers and threads that retrieve the integers are consumer. Therefore, some of you may immediately recognize that this is simply an instance of the Producer/Consumer problem! In our case, we need a buffer Buf_A for a thread in group A to deposit its message so that a thread in group B can retrieve. Similarly, we also need a buffer Buf_B for a thread in group B to deposit a message so that a thread in group A can retrieve. How many slots are there in each buffer? We claim that one slot is appropriate. Here is why. Suppose there are more than one slots in a buffer, say buffer Buf_A. Suppose further threads A1 and A2, in this order, deposit their messages into buffer Buf_A. If threads B1 and B2 retrieve A1's and A2's messages correctly, due to the dynamic behavior of threads, we are not sure if B1 and B2 will deposit their messages into Buf_B in the same order. Even through they deposit messages in the same order, we are not sure if threads A1 and A2 will retrieve the messages in the expected order. Therefore, the number of slots in each buffer must be one to remove this uncertainty.

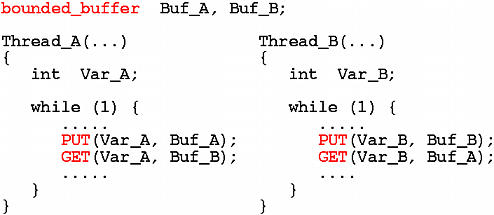

The following is an attempt based on this idea. We use bounded_buffer as a data type and declare two buffers Buf_A and Buf_B of one slot. We also use PUT(data, buffer) to indicate depositing data into buffer and GET(data, buffer) to indicate retrieving a value from buffer and storing it into variable data. Therefore, each thread simply deposits its integer message into its "output" buffer and retrieve a message from its "input" buffer. The mutual exclusion is guaranteed by the bounded buffer algorithm. This makes our program very clean.

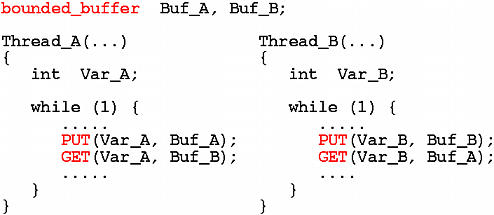

Does it work? No, this simple-minded version does not work. The following execution sequence shows the reason. Thread A1 and thread B deposit their messages into buffer Buf_A and buffer Buf_B, respectively. Then, thread B retrieves A1's message, which make buffer Buf_A empty. As a result, thread A2 can deposit its message into buffer Buf_A. At this point, if thread A1 runs and retrieves the message in buffer Buf_B, we have no problem. However, the thread scheduler may allow thread A2 to continue, and, consequently, A2 retrieves the message in buffer Buf_B which is supposed to be retrieved by thread A1. Hence, we have a race condition.

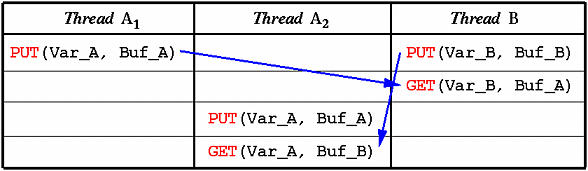

The race condition mentioned above is due to the fact that message exchange is interrupted in the middle. So, we might consider encapsulating the PUT()/GET() sequence into a critical section as follows. A binary semaphore (or a mutex lock) is used to protect both message exchange sequences.

Is this correct? No, it actually causes a deadlock immediately after this program runs. Suppose thread A runs first and passes Wait(Mutex). Then, it deposits its message into buffer Buf_A. However, since A is the only thread that is in the critical section protected by semaphore Mutex, no thread in group B can be in the critical section, and, as a result, buffer Buf_B is empty. Hence, when thread A reaches the GET(), it blocks before it can release semaphore Mutex. This causes a circular waiting: A owns the lock and is waiting for buffer Buf_B to be filled by B, while B holds the data to be put into buffer Buf_B and is waiting for thread A to release semaphore Mutex. In fact, this is the reason that the third attempt uses two semaphores!

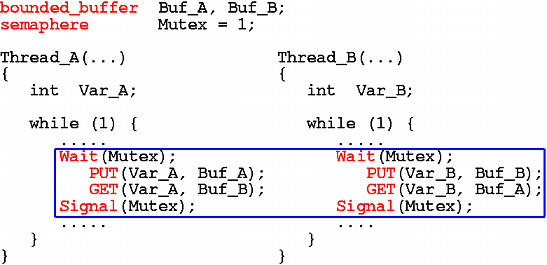

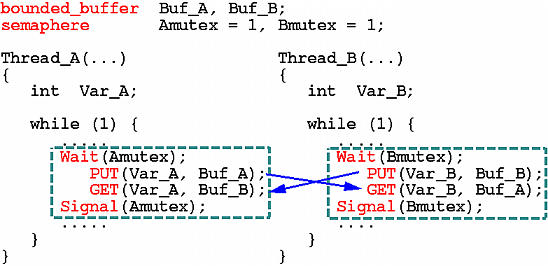

Because using only one lock can cause deadlock, we might consider the use of two mutex locks, one for threads in group A and the other for threads in group B. The following shows this new attempt:

Is this correct? Yes, it is. Semaphore Amutex (resp., Bmutex) guarantees that no more than one thread in group A (resp., group B) can be in the message exchange section. To establish the correctness of this solution, we need to show that (1) once two threads enter their critical sections, they exchange messages without the interference from any other threads, and (2) after one thread exits its critical section, no thread in the same group can rush in and ruin the existing message.

Let us see why (1) holds. Once threads A and B executes Signal(Amutex) and Signal(Bmutex), respectively, their message exchange activities complete because they both deposited their message with the call to PUT() and retrieved the other party's message with the call to GET(). Thus, (1) holds.

Now consider case (2). Suppose thread A exits its critical section while B is still in its critical section. Then, we know that B must have completed its PUT() call, because A must retrieve B's message before it can execute its GET(). If B has passed its GET() call, its message exchange has completed. Thus, the only place that concerns us is when B is between PUT() and GET(). If this is the case, the message deposited by A is still in buffer Buf_A, which means buffer Buf_A is still full. Therefore, even though a new A can enter its critical section, it is blocked by the call to PUT() until B successfully executes its GET() that empties buffer Buf_A. Consequently, the newcomer in group A cannot prohibit B from completing its message exchange activity, and (2) hold. In summary, the above solution is correct!

Lesson Learned

|

|

|

|