Classification and Regression Trees and Random Forests classifiers

Classification Trees and Random Forests

The classic statistical decision theory on which LDA, QDA, and logistic regression are based is highly model-based. We assume the features are generated by some model, we fit that model, and we use inferences from that model to make a decision. Using a model means we make assumptions, and if those assumptions are correct, we can have a lot of success. Not all classifiers make such strong assumptions, and two of these will be covered in this section: classification trees and random forests. We will also discuss a variant of these that permits regression-like models: regression trees.

Classification trees

The classifiers we have looked at so far treat the different predictors as separate pieces of evidence that combine to form an overall likelihood of one class over another. However, this may not be true. Many times, we might want to make a classification rule based on a few simple measures. The notion is that you may have several measures, and by making a few decisions about individual dimensions, you end up with a classification decision. For example, such trees are frequently used in medical contexts. If you want to know whether you are at risk for some disease, the decision process might be a series of yes/no questions, at the end of which a ‘high-risk’ or ‘low-risk’ label would be given:

- Decision 1: Are you above 35?
  - Yes: Use Decision 2
  - No: Use Decision 3

- Decision 2: Do you have testing scores above 1000?
  - Yes: High risk
  - No: Low risk

- Decision 3: Do you have a family history?
  - Yes: etc.
  - No: etc.
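The decision list above is nothing more than nested if/else logic. A minimal R sketch of it follows; the function name risk_tree is hypothetical, and because the text leaves the outcomes of Decision 3 unspecified ("etc."), that branch simply returns NA instead of guessing a label.

```r
# Hypothetical hand-coded version of the example risk tree.
# Decision 3 (family history) has unspecified outcomes in the text,
# so that branch returns NA rather than inventing a label.
risk_tree <- function(age, test_score) {
  if (age > 35) {
    # Decision 2: testing score above 1000?
    if (test_score > 1000) "High risk" else "Low risk"
  } else {
    # Decision 3 would ask about family history; outcomes not given
    NA_character_
  }
}

risk_tree(age = 40, test_score = 1200)  # "High risk"
risk_tree(age = 40, test_score = 800)   # "Low risk"
risk_tree(age = 30, test_score = 1200)  # NA (Decision 3 unspecified)
```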

Notice that if we were trying to determine a class via LDA, we’d create a single composite score based on all the questions and make a decision at the end. But if we make a decision about individual variables consecutively, it allows us to incorporate interactions between variables, which can be very powerful. Tree-based decision tools can be useful in many cases:

- When we want simple decision rules that can be applied by novices or people under time stress.

- When the structure of our classes is dependent, nested, or otherwise hierarchical. Many natural categories have a hierarchical structure, so that the way you split one variable may depend on the value of another. For example, if you want to know whether someone is ‘tall’, you might first determine their gender, and then use a different cutoff for each gender.

- When many of your observables are binary states. To classify lawmakers, we can look at their voting patterns, which are always binary. We may be able to identify just a couple of issues that will tell us everything we need to know.

- When you have multiple categories. There is no need to restrict the classes to binary, unlike for the previous methods we examined.

- When you expect some classifications will require complex interactions between feature values.
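To make the ‘tall’ example concrete, here is a small sketch on made-up data: the heights and the cutoffs of 170 cm (F) and 182 cm (M) are hypothetical. Because the labels were generated by a gender-specific rule, the nested rule is perfect by construction; the point is that no single cutoff on height alone can match it, while a tree that splits on gender first can represent it exactly.

```r
# Made-up data: heights (cm) for two groups with different 'tall' cutoffs
heights <- data.frame(
  gender = rep(c("F", "M"), each = 8),
  height = c(seq(150, 185, by = 5), seq(160, 195, by = 5))
)
heights$tall <- with(heights, ifelse(gender == "F", height > 170, height > 182))

# Best accuracy achievable with ONE cutoff on height (ignoring gender)
single_cut_acc <- max(sapply(unique(heights$height), function(cut)
  mean((heights$height > cut) == heights$tall)))

# Nested rule: split on gender first, then apply a gender-specific cutoff
nested <- with(heights, ifelse(gender == "F", height > 170, height > 182))
nested_acc <- mean(nested == heights$tall)

c(single = single_cut_acc, nested = nested_acc)  # single = 0.875, nested = 1
```

With one cutoff, a 175 cm woman (tall) and a 180 cm man (not tall) cannot both be classified correctly, which is exactly the interaction a tree captures by branching.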

To make a classification tree, we essentially have to use heuristic processes to determine the best order and cutoffs to use in making decisions, while identifying our error rates. Even with a single feature, we can make a tree that correctly classifies all the elements in a set (as long as all feature values are unique). So we also need to understand how many branches/rules to use, in order to minimize over-fitting.
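One greedy step of this heuristic can be sketched directly. rpart's default criterion for classification trees is Gini impurity; the sketch below (hypothetical helpers gini and best_split, not rpart's actual implementation, which also handles categorical predictors, weights, and surrogate splits) scans every cutoff of one numeric feature and keeps the one with the lowest weighted impurity in the two child groups. A tree is grown by repeating this search over every feature within each new branch, which is also why, with unique feature values, it can keep splitting until every leaf is pure.

```r
# Gini impurity of a vector of class labels: 1 - sum of squared class proportions
gini <- function(y) {
  p <- table(y) / length(y)
  1 - sum(p^2)
}

# Exhaustive search for the best single cutoff on one numeric feature x.
# Candidate cutoffs are midpoints between consecutive distinct values of x.
best_split <- function(x, y) {
  xs <- sort(unique(x))
  cuts <- (head(xs, -1) + tail(xs, -1)) / 2
  # weighted average impurity of the two child groups for each cutoff
  child_impurity <- sapply(cuts, function(cut) {
    left <- y[x < cut]
    right <- y[x >= cut]
    (length(left) * gini(left) + length(right) * gini(right)) / length(y)
  })
  list(cut = cuts[which.min(child_impurity)],
       parent_impurity = gini(y),
       child_impurity = min(child_impurity))
}

best_split(1:10, rep(c("A", "B"), each = 5))
# cut = 5.5, parent_impurity = 0.5, child_impurity = 0
```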

There are a number of software packages available for classification trees. One commonly used package in R is called rpart. In fact, rpart implements a more general concept called ‘Classification and Regression Trees’ (CART). So, instead of giving a categorical output to rpart, you can give it a continuous output and specify method = "anova"; each bottom leaf then computes the average value of its items, producing a sort of tree-based regression/ANOVA model.
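A quick way to check the "average value in each leaf" behaviour is to fit an anova tree and compare its predictions with the group means of the leaves. This sketch uses R's built-in mtcars data (an assumption for illustration, not data from this section).

```r
library(rpart)

# Regression tree: continuous response (mpg), method = "anova"
fit <- rpart(mpg ~ wt + hp, data = mtcars, method = "anova")

# fit$where gives, for each row, the leaf (row of fit$frame) it falls into.
# ave() replaces each mpg value by the mean mpg of the cars in the same leaf.
leaf_mean <- ave(mtcars$mpg, fit$where)

# The tree's prediction for every car is exactly its leaf mean
all.equal(unname(predict(fit)), unname(leaf_mean))  # TRUE
```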

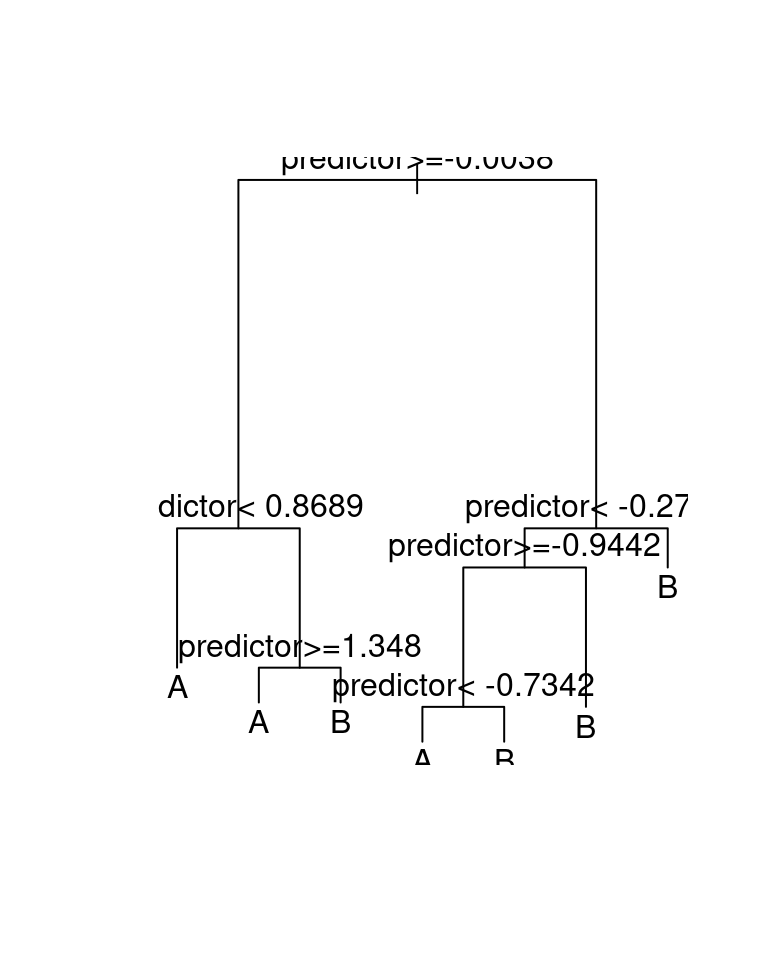

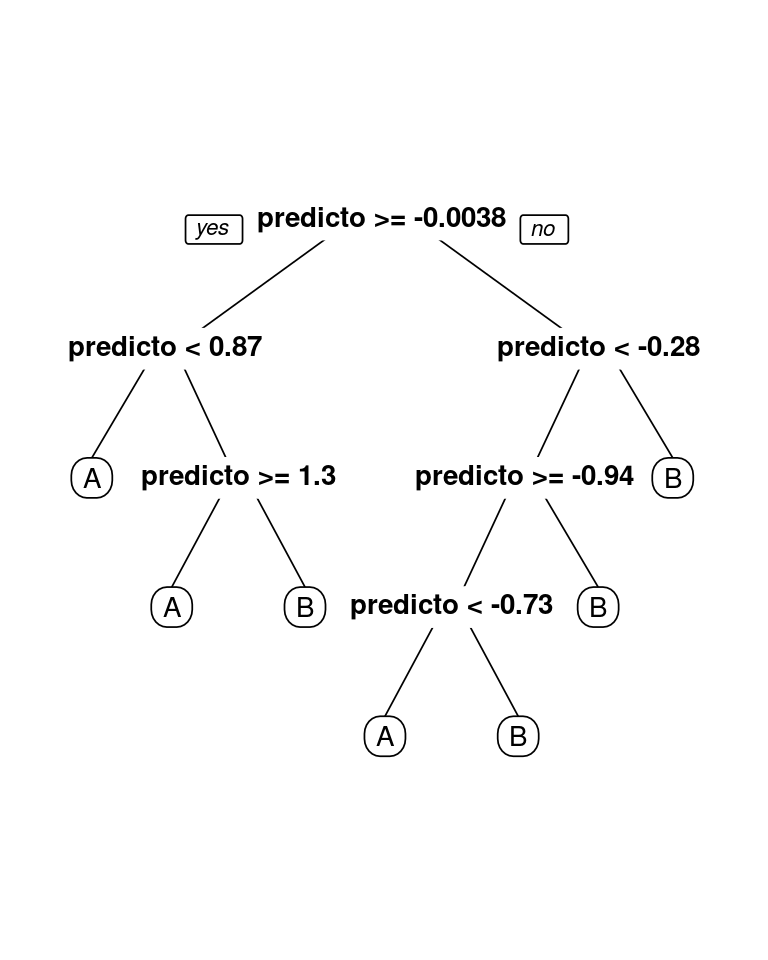

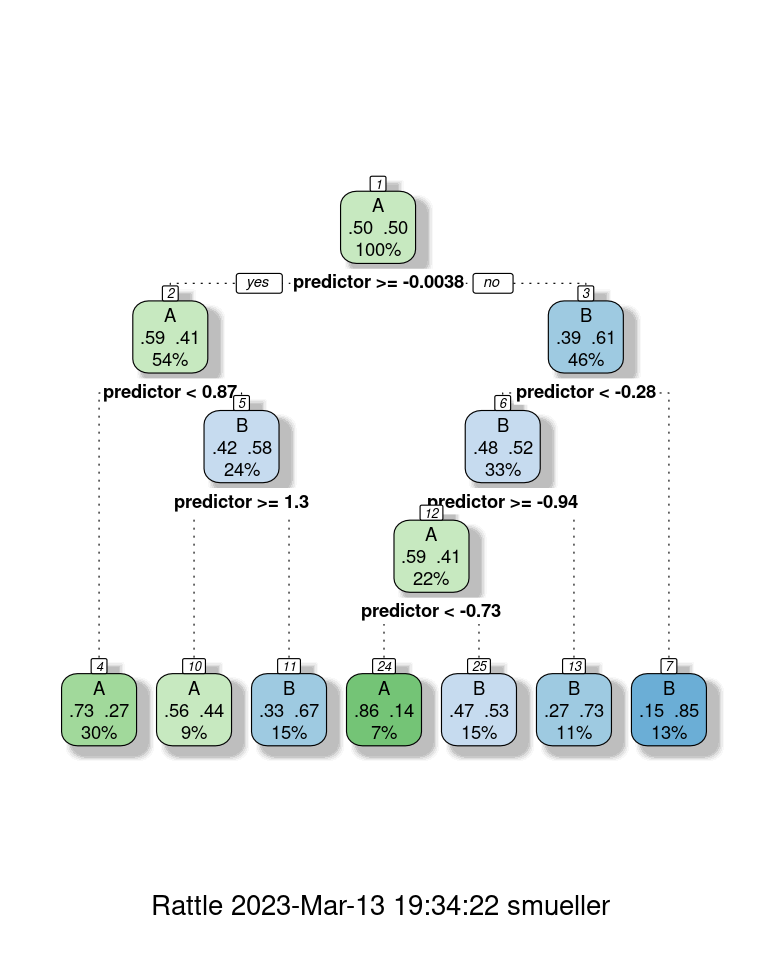

Let’s start with a simple tree made to classify elements on which we have only one measure:

library(rpart)

library(rattle) #for graphing

library(rpart.plot) #also for graphing

library(DAAG)

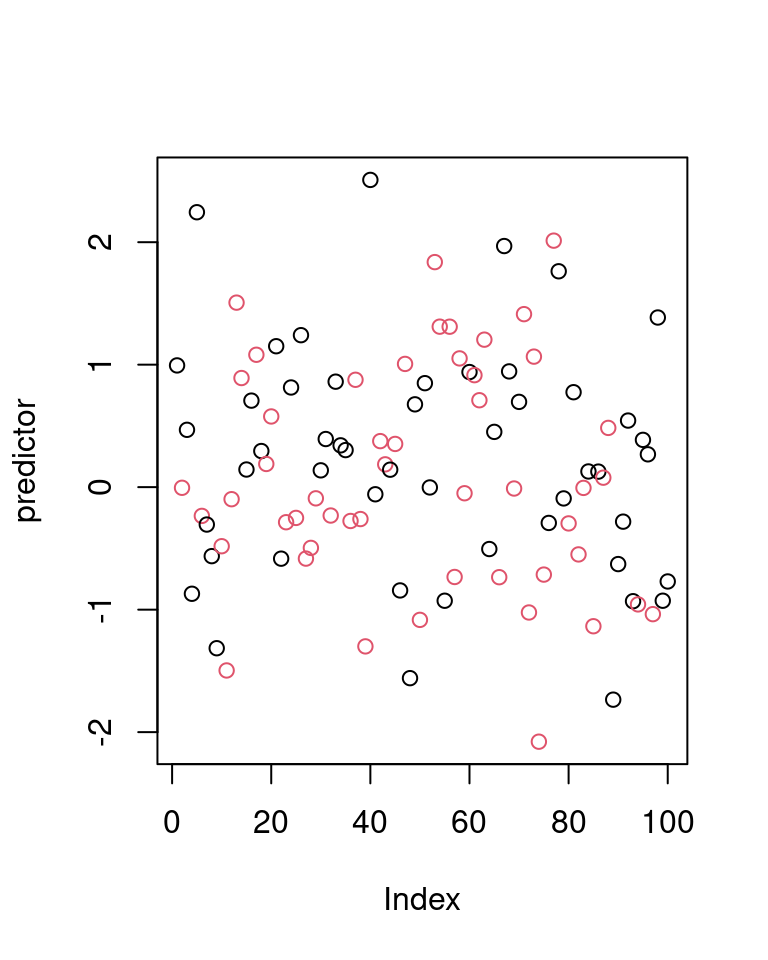

classes <- sample(c("A", "B"), 100, replace = TRUE)

predictor <- rnorm(100)

r1 <- rpart(classes ~ predictor, method = "class")

plot(r1)

text(r1)

confusion(classes, predict(r1, type = "class"))  # confusion() comes from DAAG

Overall accuracy = 0.7

Confusion matrix

Predicted (cv)

Actual [,1] [,2]

[1,] 0.66 0.34

[2,] 0.26 0.74

Notice that even though the predictor had no true relationship to the categories, we were able to get 70% accuracy on the same data used to build the tree. We could in fact do better, just by adding rules. Various aspects of the tree algorithm are controlled via the control argument, using rpart.control.

Here, we set minsplit to 1, which says we can attempt to split a group as long as it has at least 1 item. minbucket specifies the smallest number of observations that can appear in a bottom (terminal) node, and cp is a complexity parameter. It defaults to .01, and is a criterion for how much the overall fit must improve before a split is accepted. A negative value means that any additional node counts as an improvement, and so it will accept any split.

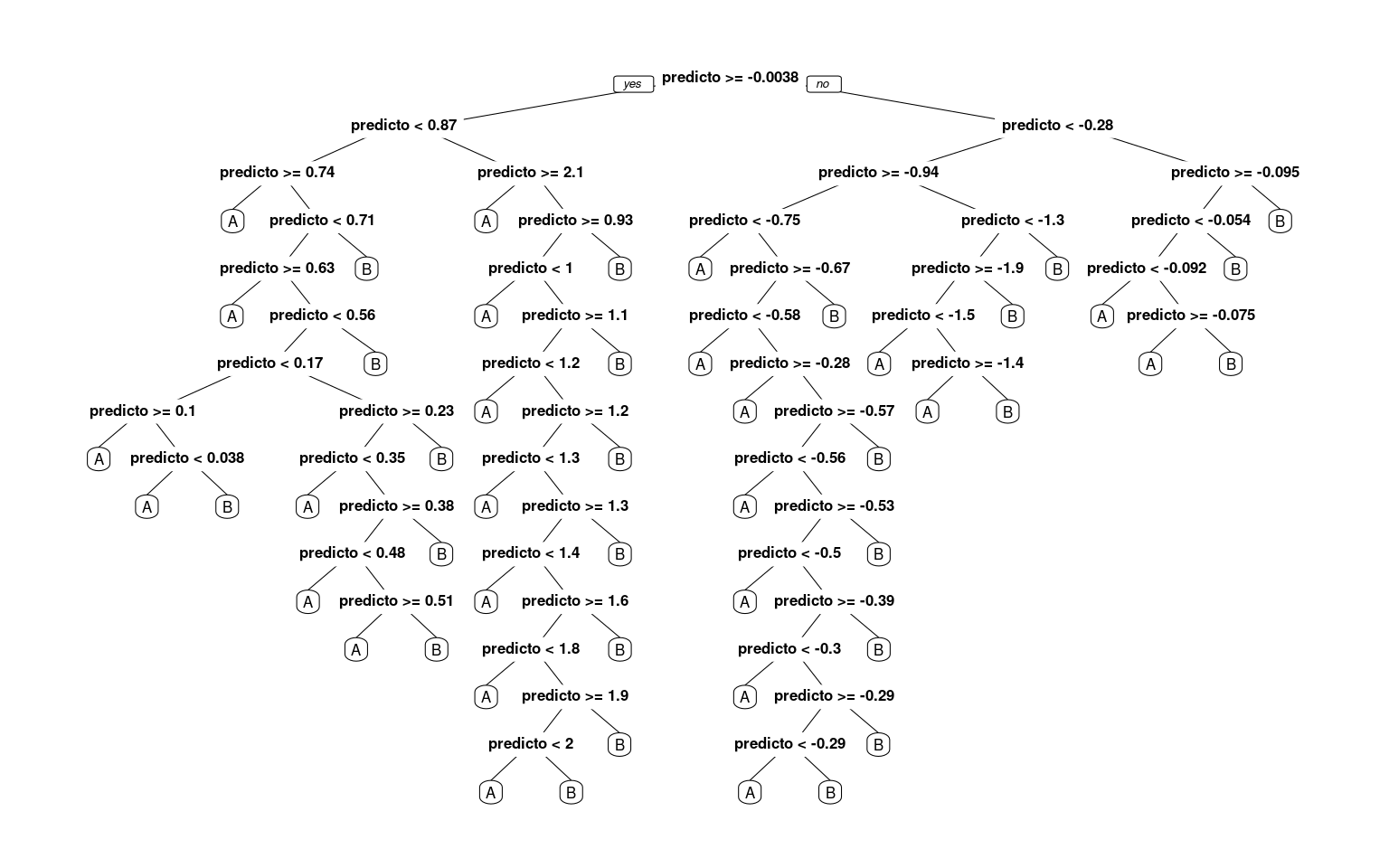

r2 <- rpart(classes ~ predictor, method = "class", control = rpart.control(minsplit = 1,

minbucket = 1, cp = -1))

prp(r2)

# this tree is a bit too large for this graphics method: fancyRpartPlot(r2)

confusion(classes, predict(r2, type = "class"))

Overall accuracy = 1

Confusion matrix

Predicted (cv)

Actual [,1] [,2]

[1,] 1 0

[2,] 0 1

Now, we have completely predicted every item by successively dividing the line. Notice that, by default, rpart chooses the number of nodes through its control parameters. The defaults are often reasonable, but there is no guarantee they are the right ones for your data. This is a critical decision for a decision tree, as it determines the complexity of the model and how much it overfits the data.
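One way to see how much the control parameters matter, and to let cross-validation choose the size instead, is sketched below on R's built-in iris data (an assumption for illustration; it is not the data used in this section). It compares the default tree with a deliberately permissive one (cp = 0, minsplit = 2, minbucket = 1), then prunes the permissive tree back to the cp value with the smallest cross-validated error (xerror) in its CP table. Taking the minimum xerror is only one convention; a common alternative is the one-standard-error rule, which picks the smallest tree whose xerror is within one xstd of the minimum.

```r
library(rpart)

# Default controls (minsplit = 20, minbucket = 7, cp = 0.01)
default_fit <- rpart(Species ~ ., data = iris, method = "class")

# A deliberately permissive tree; set a seed because xerror uses random CV folds
set.seed(1)
full_fit <- rpart(Species ~ ., data = iris, method = "class",
                  control = rpart.control(cp = 0, minsplit = 2, minbucket = 1))

n_leaves <- function(fit) sum(fit$frame$var == "<leaf>")
c(default = n_leaves(default_fit), full = n_leaves(full_fit))

# Prune back to the cp with the lowest cross-validated error
cp_table <- full_fit$cptable
best_cp <- cp_table[which.min(cp_table[, "xerror"]), "CP"]
pruned_fit <- prune(full_fit, cp = best_cp)
n_leaves(pruned_fit)
```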

How will this work for a real data set? Let’s re-load the engineering data set. In this data, we asked both Engineering and Psychology students to determine whether pairs of words from Psychology and Engineering go together, and measured their time and accuracy.

joint <- read.csv("eng-joint.csv")

joint$eng <- as.factor(c("psych", "eng")[joint$eng + 1])

## This is the partitioning tree:

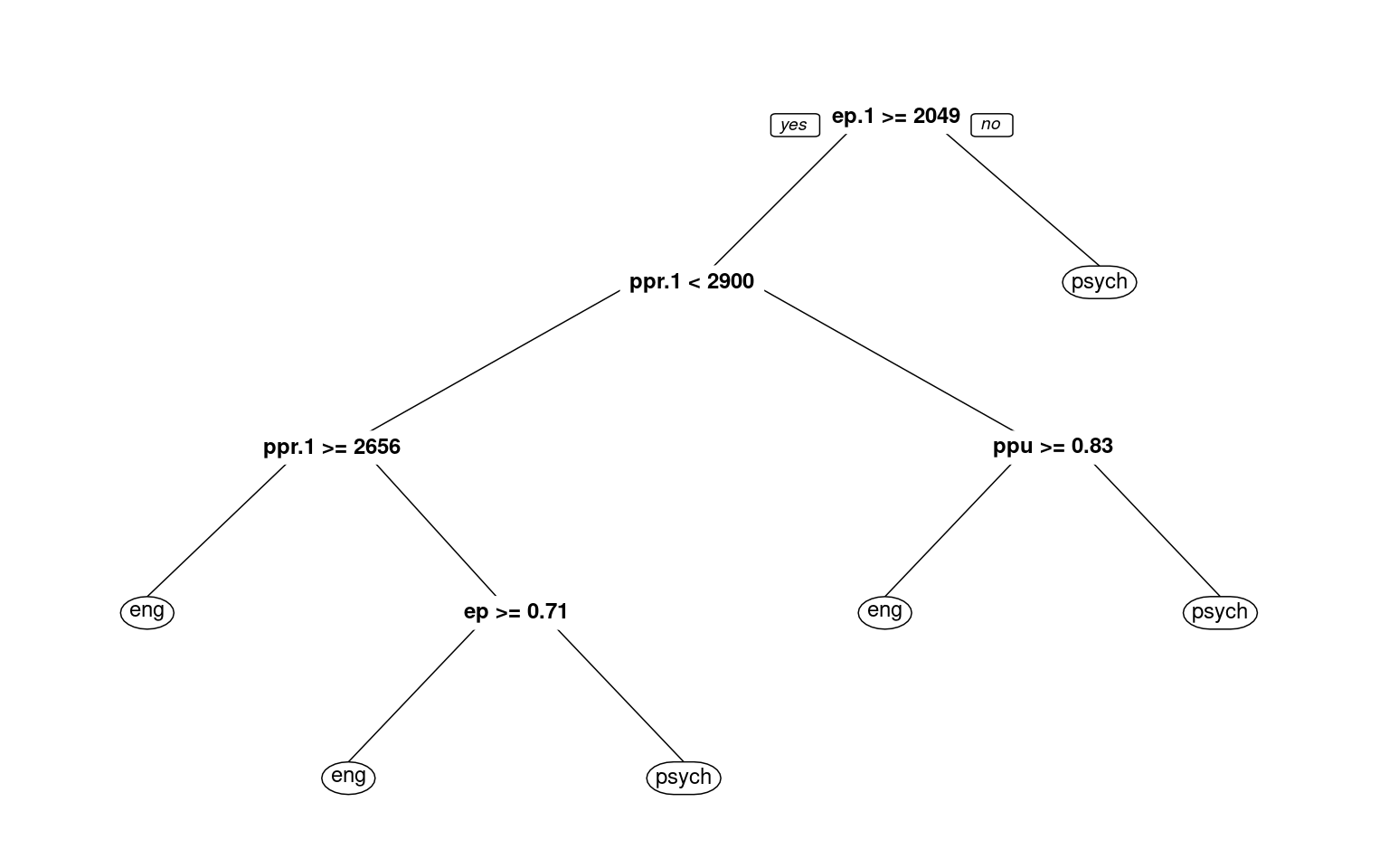

r1 <- rpart(eng ~ ., data = joint, method = "class")

r1

n= 76

node), split, n, loss, yval, (yprob)

* denotes terminal node

1) root 76 38 eng (0.50000000 0.50000000)

2) ep.1>=2049.256 68 31 eng (0.54411765 0.45588235)

4) ppr.1< 2900.149 39 14 eng (0.64102564 0.35897436)

8) ppr.1>=2656.496 11 1 eng (0.90909091 0.09090909) *

9) ppr.1< 2656.496 28 13 eng (0.53571429 0.46428571)

18) ep>=0.7083333 14 4 eng (0.71428571 0.28571429) *

19) ep< 0.7083333 14 5 psych (0.35714286 0.64285714) *

5) ppr.1>=2900.149 29 12 psych (0.41379310 0.58620690)

10) ppu>=0.8333333 7 2 eng (0.71428571 0.28571429) *

11) ppu< 0.8333333 22 7 psych (0.31818182 0.68181818) *

3) ep.1< 2049.256 8 1 psych (0.12500000 0.87500000) *

Overall accuracy = 0.737

Confusion matrix

Predicted (cv)

Actual eng psych

eng 0.658 0.342

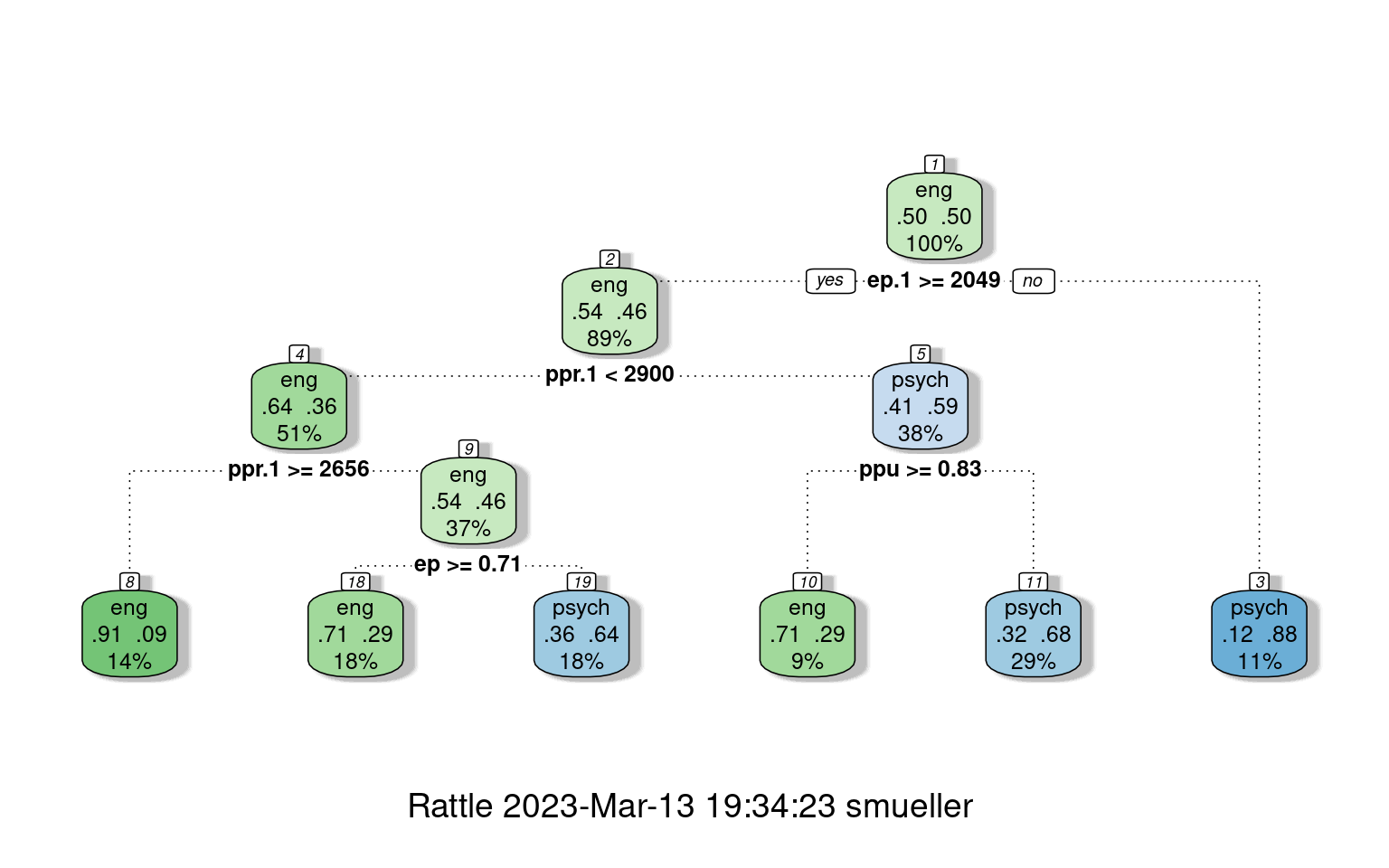

psych 0.184 0.816With the default full partitioning, we get 73% accuracy. But the decision tree is fairly complicated. For example, notice that nodes 2 and 4 consecutively select ppr.1 twice. First, if ppr.1 is faster than 2900, it then checks if it is slower than 2656, and makes different decisions based on these narrow ranges of response time. This seems unlikely to be a real or meaningful decision, and we might want something simpler. Let’s only allow it to go to a depth of 2, by controlling maxdepth:

library(rattle)

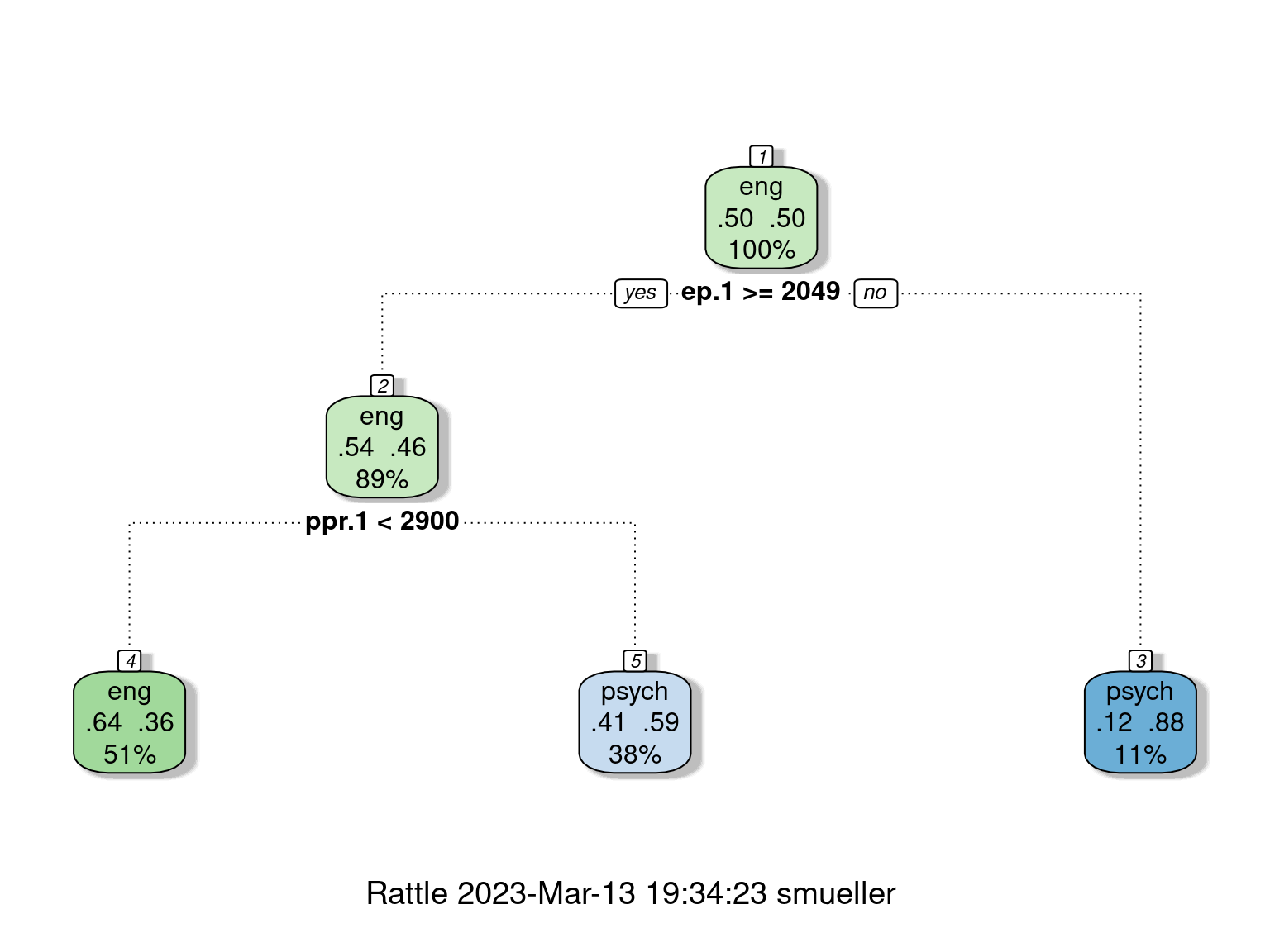

r2 <- rpart(eng ~ ., data = joint, method = "class", control = rpart.control(maxdepth = 2))

r2

n= 76

node), split, n, loss, yval, (yprob)

* denotes terminal node

1) root 76 38 eng (0.5000000 0.5000000)

2) ep.1>=2049.256 68 31 eng (0.5441176 0.4558824)

4) ppr.1< 2900.149 39 14 eng (0.6410256 0.3589744) *

5) ppr.1>=2900.149 29 12 psych (0.4137931 0.5862069) *

3) ep.1< 2049.256 8 1 psych (0.1250000 0.8750000) *

confusion(joint$eng, predict(r2, type = "class"))

Overall accuracy = 0.645

Confusion matrix

Predicted (cv)

Actual eng psych

eng 0.658 0.342

psych 0.368 0.632

Here, we are down to 65% ‘correct’ classifications.

Looking deeper

If we look at the summary of the tree object, it gives us a lot of details about goodness of fit and decision points.

summary(r2)

Call:

rpart(formula = eng ~ ., data = joint, method = "class", control = rpart.control(maxdepth = 2))

n= 76

CP nsplit rel error xerror xstd

1 0.1578947 0 1.0000000 1.157895 0.1132690

2 0.1315789 1 0.8421053 1.289474 0.1097968

3 0.0100000 2 0.7105263 1.184211 0.1127448

Variable importance

ep.1 ppr.1 ppu.1 eer.1 eeu.1 ep

30 25 16 15 12 1

Node number 1: 76 observations, complexity param=0.1578947

predicted class=eng expected loss=0.5 P(node) =1

class counts: 38 38

probabilities: 0.500 0.500

left son=2 (68 obs) right son=3 (8 obs)

Primary splits:

ep.1 < 2049.256 to the right, improve=2.514706, (0 missing)

eeu.1 < 2431.336 to the right, improve=2.326531, (0 missing)

ppu.1 < 4579.384 to the right, improve=2.072727, (0 missing)

eer.1 < 4687.518 to the right, improve=1.966874, (0 missing)

ppu < 0.8333333 to the right, improve=1.719298, (0 missing)

Surrogate splits:

ppu.1 < 1637.18 to the right, agree=0.947, adj=0.500, (0 split)

eeu.1 < 1747.891 to the right, agree=0.934, adj=0.375, (0 split)

eer.1 < 1450.41 to the right, agree=0.921, adj=0.250, (0 split)

ppr.1 < 1867.892 to the right, agree=0.921, adj=0.250, (0 split)

Node number 2: 68 observations, complexity param=0.1315789

predicted class=eng expected loss=0.4558824 P(node) =0.8947368

class counts: 37 31

probabilities: 0.544 0.456

left son=4 (39 obs) right son=5 (29 obs)

Primary splits:

ppr.1 < 2900.149 to the left, improve=1.717611, (0 missing)

ppu < 0.8333333 to the right, improve=1.553072, (0 missing)

ppu.1 < 4579.384 to the right, improve=1.535294, (0 missing)

eer.1 < 4687.518 to the right, improve=1.529205, (0 missing)

ep.1 < 2754.762 to the left, improve=1.013682, (0 missing)

Surrogate splits:

eer.1 < 3007.036 to the left, agree=0.750, adj=0.414, (0 split)

ep.1 < 2684.733 to the left, agree=0.647, adj=0.172, (0 split)

ppu.1 < 2346.981 to the left, agree=0.632, adj=0.138, (0 split)

eeu.1 < 2806.34 to the left, agree=0.618, adj=0.103, (0 split)

ep < 0.7916667 to the left, agree=0.603, adj=0.069, (0 split)

Node number 3: 8 observations

predicted class=psych expected loss=0.125 P(node) =0.1052632

class counts: 1 7

probabilities: 0.125 0.875

Node number 4: 39 observations

predicted class=eng expected loss=0.3589744 P(node) =0.5131579

class counts: 25 14

probabilities: 0.641 0.359

Node number 5: 29 observations

predicted class=psych expected loss=0.4137931 P(node) =0.3815789

class counts: 12 17

probabilities: 0.414 0.586

These tree models seem to fit a lot better than our earlier LDA models, which suggests that they are probably overfitting. Cross-validation is controlled by the xval parameter of rpart.control (the number of cross-validation folds):

n= 76

node), split, n, loss, yval, (yprob)

* denotes terminal node

1) root 76 38 eng (0.5000000 0.5000000)

2) ep.1>=2049.256 68 31 eng (0.5441176 0.4558824)

4) ppr.1< 2900.149 39 14 eng (0.6410256 0.3589744) *

5) ppr.1>=2900.149 29 12 psych (0.4137931 0.5862069)

10) ppu>=0.8333333 7 2 eng (0.7142857 0.2857143) *

11) ppu< 0.8333333 22 7 psych (0.3181818 0.6818182) *

3) ep.1< 2049.256 8 1 psych (0.1250000 0.8750000) *

Overall accuracy = 0.737

Confusion matrix

Predicted (cv)

Actual eng psych

eng 0.658 0.342

psych 0.184 0.816

Overall accuracy = 0.684

Confusion matrix

Predicted (cv)

Actual eng psych

eng 0.789 0.211

psych 0.421 0.579

Accuracy goes down a bit (from about 74% to 68%); the 74% is about what we achieved with the simple LDA models.

Clearly, for partitioning trees, we have to be careful about overfitting, because we can always easily get a perfect classification of the training data.
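The CP table in summary(r2) above makes this concrete. Both rel error and xerror are expressed relative to the error at the root node (38 of 76 cases, i.e. 0.5), so multiplying by 0.5 turns them back into misclassification rates. The sketch below does that arithmetic on the printed numbers: the training (resubstitution) accuracy of the two-split tree is the 64.5% reported earlier, while the cross-validated accuracy implied by xerror is only about 41%, below chance. Because xerror comes from random folds, these numbers will vary from run to run.

```r
# Values copied from the CP table printed by summary(r2) above (depth-2 tree)
root_error <- 38 / 76   # root node: 38 of 76 misclassified if we always guess 'eng'
cp_table <- data.frame(
  nsplit    = c(0, 1, 2),
  rel_error = c(1.0000000, 0.8421053, 0.7105263),
  xerror    = c(1.157895, 1.289474, 1.184211)
)

# Both columns are scaled so that the root node's error is 1;
# multiplying by root_error converts them back to error rates.
cp_table$train_accuracy <- 1 - cp_table$rel_error * root_error
cp_table$cv_accuracy    <- 1 - cp_table$xerror * root_error
round(cp_table, 3)
# train_accuracy: 0.500 0.579 0.645; cv_accuracy: 0.421 0.355 0.408
```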

Regression Trees

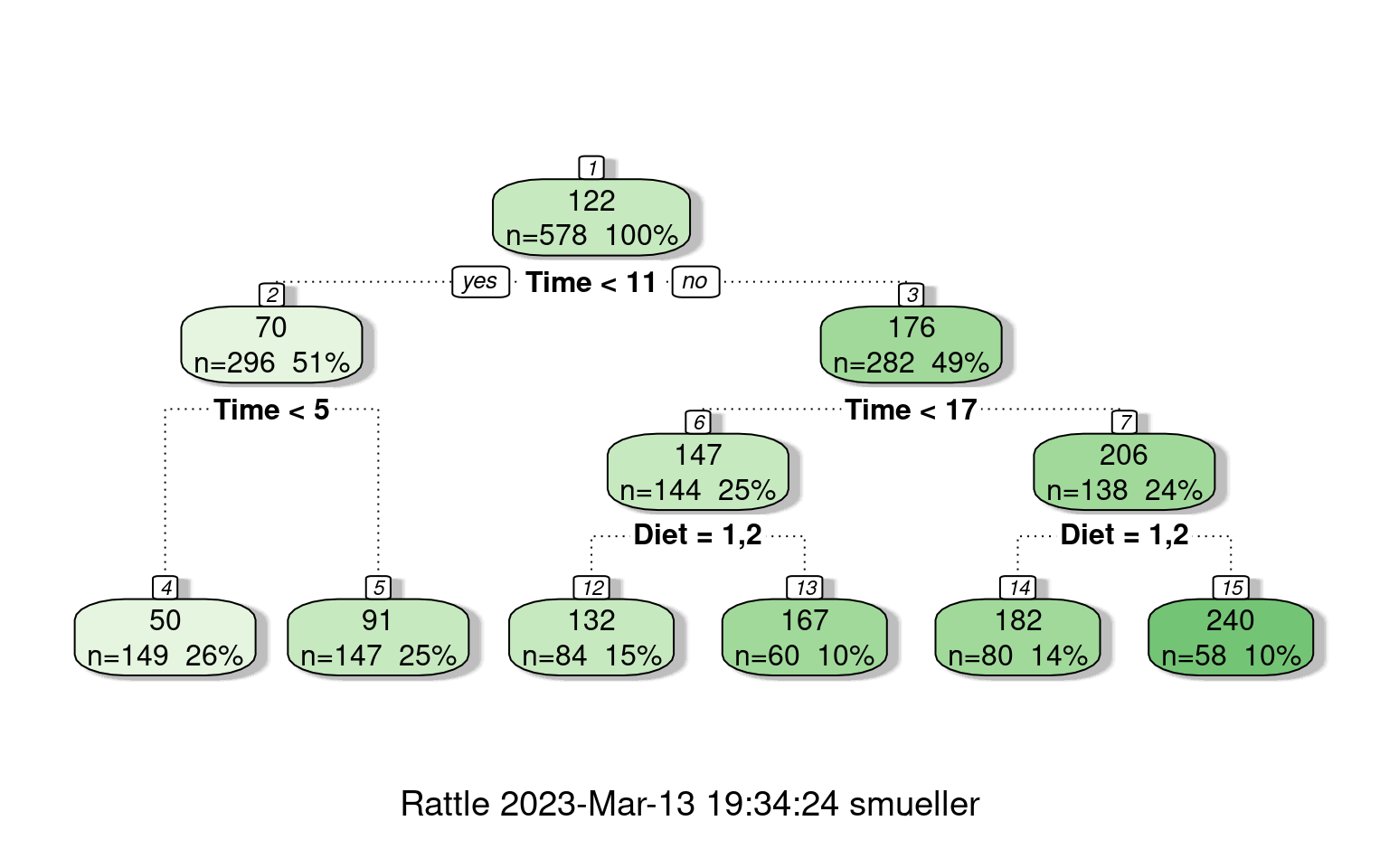

Instead of using a tree to divide and predict group memberships, rpart can also use a tree as a sort of regression. It models all of the data within a group as a single value (the group mean), and then tries to divide groups to improve fit. There are some pdf help files available for more detail, but the regression options (including poisson and anova) are somewhat poorly documented. Basically, we can use the same ideas to partition the data, and then fit a single value within each group. In the tree, the top value shown in each node is the mean for that branch.
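For a continuous response, the split criterion changes from impurity to squared error: each side of a candidate cutoff is predicted by its own mean, and the cutoff that most reduces the sum of squared errors wins. The sketch below (a hypothetical helper, best_sse_split, covering numeric predictors only) applies this to Time in the built-in ChickWeight data; it should recover the root split reported in the summary that follows (Time < 11, improve of about 0.5496, where improve is the proportional reduction in the node's sum of squares).

```r
# Regression-tree split search: choose the cutoff on x that most reduces the
# sum of squared errors (SSE) of y when each side is predicted by its own mean.
best_sse_split <- function(x, y) {
  sse <- function(v) sum((v - mean(v))^2)
  xs <- sort(unique(x))
  cuts <- (head(xs, -1) + tail(xs, -1)) / 2
  parent <- sse(y)
  # proportional reduction in SSE for each candidate cutoff
  improve <- sapply(cuts, function(cut)
    (parent - sse(y[x < cut]) - sse(y[x >= cut])) / parent)
  list(cut = cuts[which.max(improve)], improve = max(improve))
}

# ChickWeight ships with R (datasets package)
best_sse_split(ChickWeight$Time, ChickWeight$weight)
```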

cw <- rpart(weight ~ Time + Diet, data = ChickWeight, control = rpart.control(maxdepth = 5))

summary(cw)

Call:

rpart(formula = weight ~ Time + Diet, data = ChickWeight, control = rpart.control(maxdepth = 5))

n= 578

CP nsplit rel error xerror xstd

1 0.54964983 0 1.0000000 1.0031793 0.06390605

2 0.08477800 1 0.4503502 0.4598329 0.03642709

3 0.04291979 2 0.3655722 0.3695321 0.02882118

4 0.03905827 3 0.3226524 0.3426987 0.03004974

5 0.01431308 4 0.2835941 0.3126594 0.02790160

6 0.01000000 5 0.2692810 0.3127935 0.02767334

Variable importance

Time Diet

93 7

Node number 1: 578 observations, complexity param=0.5496498

mean=121.8183, MSE=5042.484

left son=2 (296 obs) right son=3 (282 obs)

Primary splits:

Time < 11 to the left, improve=0.54964980, (0 missing)

Diet splits as LRRR, improve=0.04479917, (0 missing)

Node number 2: 296 observations, complexity param=0.04291979

mean=70.43243, MSE=700.6779

left son=4 (149 obs) right son=5 (147 obs)

Primary splits:

Time < 5 to the left, improve=0.60314240, (0 missing)

Diet splits as LRRR, improve=0.04085943, (0 missing)

Node number 3: 282 observations, complexity param=0.084778

mean=175.7553, MSE=3919.043

left son=6 (144 obs) right son=7 (138 obs)

Primary splits:

Time < 17 to the left, improve=0.2235767, (0 missing)

Diet splits as LLRR, improve=0.1333256, (0 missing)

Node number 4: 149 observations

mean=50.01342, MSE=71.0468

Node number 5: 147 observations

mean=91.12925, MSE=487.9085

Node number 6: 144 observations, complexity param=0.01431308

mean=146.7778, MSE=1825.326

left son=12 (84 obs) right son=13 (60 obs)

Primary splits:

Diet splits as LLRR, improve=0.1587094, (0 missing)

Time < 15 to the left, improve=0.1205157, (0 missing)

Node number 7: 138 observations, complexity param=0.03905827

mean=205.9928, MSE=4313.283

left son=14 (80 obs) right son=15 (58 obs)

Primary splits:

Diet splits as LLRR, improve=0.19124870, (0 missing)

Time < 19 to the left, improve=0.02989732, (0 missing)

Node number 12: 84 observations

mean=132.3929, MSE=1862.834

Node number 13: 60 observations

mean=166.9167, MSE=1077.543

Node number 14: 80 observations

mean=181.5375, MSE=3790.849

Node number 15: 58 observations

mean=239.7241, MSE=3071.165

var n wt dev yval complexity ncompete nsurrogate

1 Time 578 578 2914555.93 121.81834 0.549649827 1 0

2 Time 296 296 207400.65 70.43243 0.042919791 1 0

4 <leaf> 149 149 10585.97 50.01342 0.010000000 0 0

5 <leaf> 147 147 71722.54 91.12925 0.007137211 0 0

3 Time 282 282 1105170.12 175.75532 0.084778005 1 0

6 Diet 144 144 262846.89 146.77778 0.014313079 1 0

12 <leaf> 84 84 156478.04 132.39286 0.005288100 0 0

13 <leaf> 60 60 64652.58 166.91667 0.005342978 0 0

7 Diet 138 138 595232.99 205.99275 0.039058272 1 0

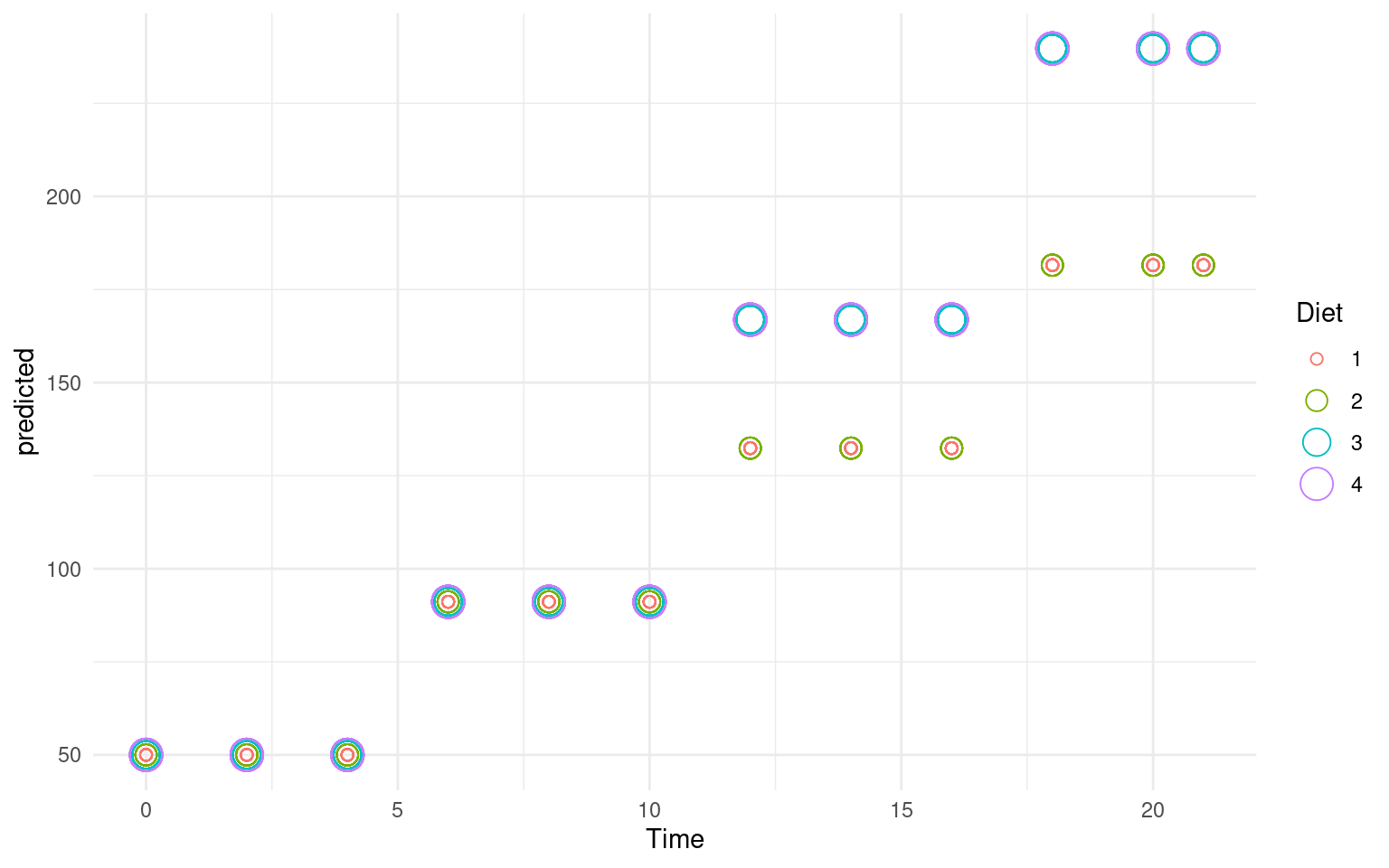

[ reached 'max' / getOption("max.print") -- omitted 2 rows ]

library(ggplot2)

ChickWeight$predicted <- predict(cw)

ggplot(ChickWeight, aes(x = Time, y = predicted, group = Diet, color = Diet, size = Diet)) +

geom_point(shape = 1) + theme_minimal()

Random Forests

One advantage of partitioning/decision trees is that they are fast and easy to make. They are also considered interpretable and easy to understand. A tree like this can be used by a doctor or a medical counselor to help understand the risk for a disease, by asking a few simple questions.

The downside of decision trees is that they are often not very accurate, especially once they have been trimmed to avoid over-fitting. Researchers have been interested in combining the results of many small (and often not-very-good) classifiers to make one better one. These are described as ‘ensemble’ methods (‘bagging’ and ‘boosting’ are two well-known families), and there are a number of ways to achieve this. Doing this in a particular way with decision trees is referred to as a ‘random forest’ (see Breiman and Cutler).

Random forests can be used for both regression and classification (trees can be used in either way as well), and the classification and regression trees (CART) approach is a method that supports both. A random forest works as follows:

- Build \(N\) trees (where N may be hundreds; Brieman says ‘Don’t be stingy’), where each tree is built from a random subset of features/variables. That is, on each step, choose the best variable to divide the branch based on a random subset of variables.

For each tree: 1. Pick a random sample of data. This is by default ‘bootstrapped’, meaning it has the same size as your data but samples it with replacement. 2. Pick K, where k is the number of variables considered at each step (typically sqrt(number of variables)) 3. Select k variables at random. 4. Find the best split among those k variables. 5. Repeat from point 3 until you reach the end criteria (determined by maxnodes and nodesize)

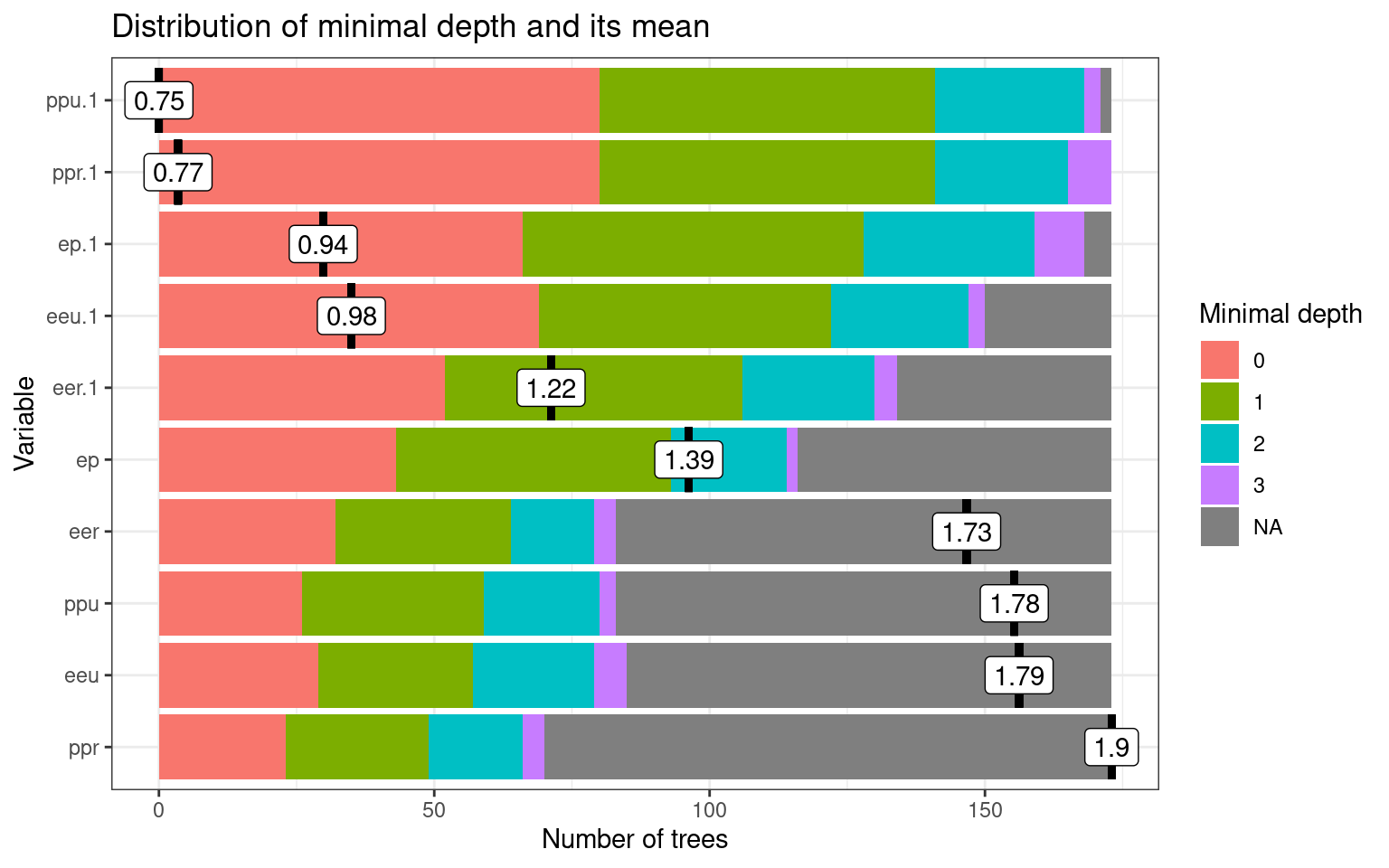

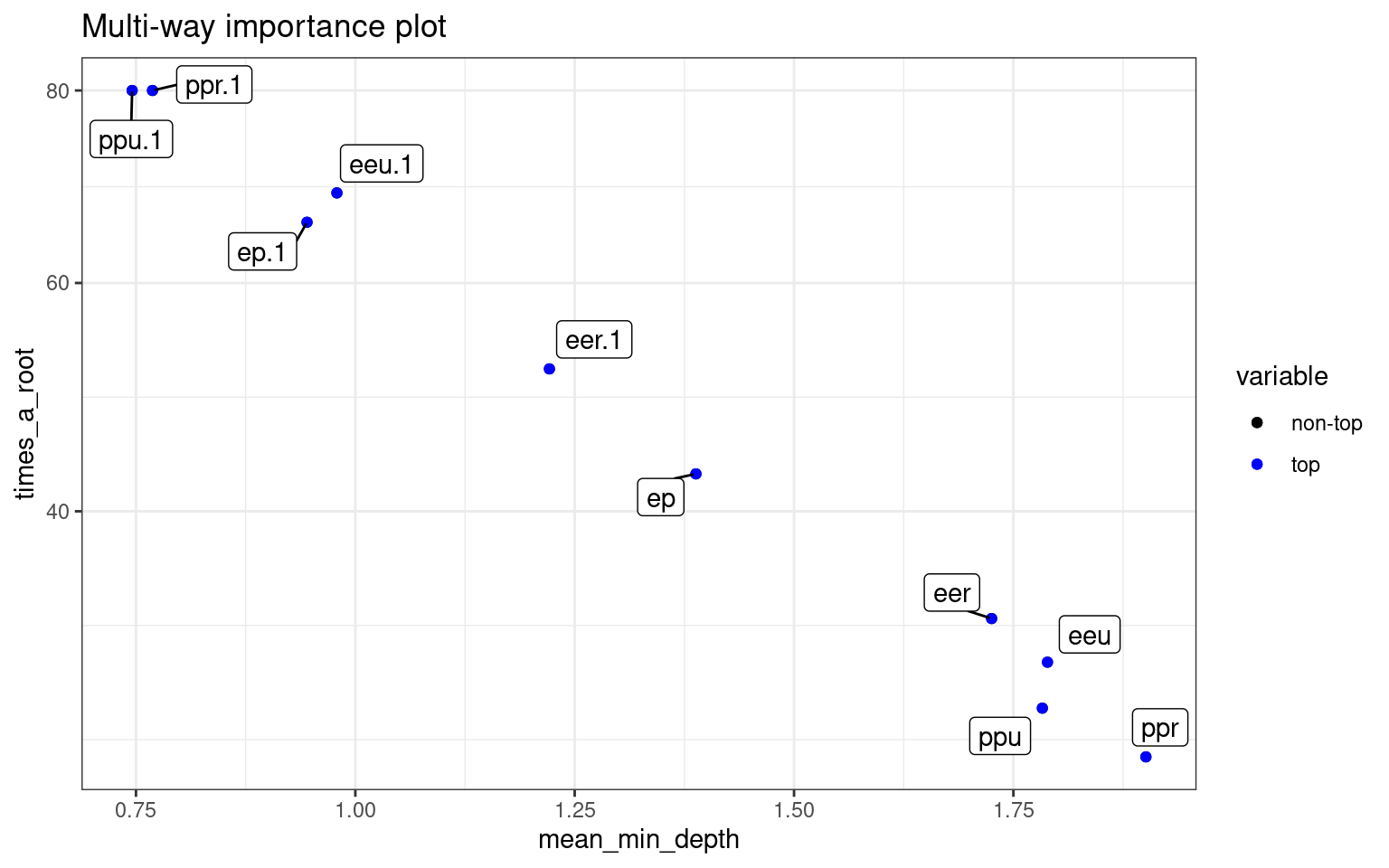

- Then, to classify your data, have each tree determine its best guess, and then take the most frequent outcome (or give a probabilistic answer based on the balance of evidence). This can both provide a robust classifier and a general importance of variables. You can look at the trees that more more accurate and see which variables were used, and which were used earlier, and this can give an indication of the most robust classification. In contrast to a normal CART, where the first cut may end up not being as important as later cuts.
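The procedure above can be sketched with rpart as the base learner. This is a simplified stand-in, not the randomForest algorithm: rpart cannot sample variables at each split, so this sketch draws k random variables once per tree (closer to the ‘random subspace’ method), and it grows default-sized rpart trees rather than deep unpruned ones. The helpers grow_forest and predict_forest are hypothetical, and the built-in iris data is used only for illustration. Predictions combine the trees by majority vote, and the vote shares give the probabilistic answer mentioned above.

```r
library(rpart)

# Grow ntree classification trees, each on a bootstrap sample of the rows and a
# random subset of k predictors (chosen once per tree, NOT at every split).
grow_forest <- function(data, response, ntree = 50, k = NULL) {
  predictors <- setdiff(names(data), response)
  if (is.null(k)) k <- floor(sqrt(length(predictors)))
  lapply(seq_len(ntree), function(i) {
    rows <- sample(nrow(data), replace = TRUE)   # bootstrap sample of rows
    vars <- sample(predictors, k)                # random subset of variables
    rpart(reformulate(vars, response = response),
          data = data[rows, ], method = "class")
  })
}

# Each tree votes; return the vote share of each class and the majority class.
predict_forest <- function(forest, newdata, classes) {
  votes <- sapply(forest, function(tree)
    as.character(predict(tree, newdata, type = "class")))
  shares <- t(apply(votes, 1, function(v)
    sapply(classes, function(cl) mean(v == cl))))
  list(shares = shares, majority = classes[apply(shares, 1, which.max)])
}

set.seed(42)
forest <- grow_forest(iris, "Species", ntree = 50)
out <- predict_forest(forest, iris, levels(iris$Species))
head(out$shares)                     # vote shares per class
mean(out$majority == iris$Species)   # accuracy on the training rows
```

Accuracy here is measured on the same rows used to grow the trees, so it is optimistic; randomForest reports an out-of-bag estimate instead.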

The randomForest package in R supports building these models. Because these can be a bit more difficult to comprehend, there is a companion package, randomForestExplainer, that is handy for digging into the forests derived.

library(randomForest)

library(randomForestExplainer)

rf <- randomForest(x = joint[, -1], y = joint$eng, proximity = T, ntree = 5000)

rf

Call:

randomForest(x = joint[, -1], y = joint$eng, ntree = 5000, proximity = T)

Type of random forest: classification

Number of trees: 5000

No. of variables tried at each split: 3

OOB estimate of error rate: 55.26%

Confusion matrix:

eng psych class.error

eng 17 21 0.5526316

psych 21 17 0.5526316

confusion(joint$eng, predict(rf))

Overall accuracy = 0.447

Confusion matrix

Predicted (cv)

Actual eng psych

eng 0.447 0.553

psych 0.553 0.447

If you run this repeatedly, you get a different answer each time. For this data, the accuracy is quite often below chance. The confusion matrix produced is “OOB” (out-of-bag): each case is predicted only by the trees whose bootstrap sample did not include it, which is similar in spirit to leave-one-out cross-validation. Looking at the trees, they are quite complex as well, with tens of rules. We can get a printout of individual trees with getTree (for example, getTree(rf, k = 1)):

left daughter right daughter split var split point status prediction

1 2 3 9 2044.8691669 1 0

2 4 5 4 0.5000000 1 0

3 6 7 5 0.8333333 1 0

4 8 9 8 2347.9023558 1 0

5 0 0 0 0.0000000 -1 2

6 10 11 1 0.8333333 1 0

7 12 13 4 0.8333333 1 0

8 0 0 0 0.0000000 -1 2

9 0 0 0 0.0000000 -1 1

10 14 15 9 2696.1588546 1 0

11 16 17 2 0.5000000 1 0

12 0 0 0 0.0000000 -1 1

[ reached getOption("max.print") -- omitted 23 rows ]

left daughter right daughter split var split point status prediction

1 2 3 8 2017.3427358 1 0

2 0 0 0 0.0000000 -1 2

3 4 5 9 5065.2031024 1 0

4 6 7 6 2042.2244373 1 0

5 0 0 0 0.0000000 -1 1

6 0 0 0 0.0000000 -1 1

7 8 9 6 2337.2799029 1 0

8 10 11 8 2034.9287608 1 0

9 12 13 8 4685.4323186 1 0

10 0 0 0 0.0000000 -1 1

11 0 0 0 0.0000000 -1 2

12 14 15 7 2425.3165598 1 0

[ reached getOption("max.print") -- omitted 19 rows ]

There are many arguments that support the sampling/evaluation process. The feasibility of changing these will often depend on the size of the data set. With a large data set and many variables, fitting one of these models may take a long time if they are not constrained. Here we look at:

- sampsize: the size of the sample drawn to grow each tree.

- replace: whether cases are sampled with or without replacement.

- mtry: how many variables to try at each split.

- maxnodes: the maximum number of terminal nodes each tree can have.

- ntree: how many trees to grow.

- keep.inbag: keep track of which samples are ‘in the bag’ for each tree.

- localImp: whether to compute casewise (local) variable importance.
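How sampsize and replace determine which cases are in the bag (and therefore which are out-of-bag) can be simulated without fitting any trees. The sketch below builds an n × ntree matrix of in-bag counts, analogous to what keep.inbag = TRUE stores in the fitted forest; the helper inbag_matrix is hypothetical. With the default bootstrap, each case is left out of a given tree with probability (1 - 1/n)^n, about 0.365 for the 76 rows of the joint data; with sampsize = 10 and replace = FALSE, as in the call below, each tree sees only 10 rows and leaves 66 of 76 out.

```r
# Simulate which rows are 'in the bag' for each tree: an n x ntree matrix of
# counts (how many times each row was drawn for each tree).
inbag_matrix <- function(n, ntree, sampsize = n, replace = TRUE) {
  sapply(seq_len(ntree), function(i)
    tabulate(sample(n, sampsize, replace = replace), nbins = n))
}

set.seed(7)
n <- 76  # rows in the joint data set
boot  <- inbag_matrix(n, ntree = 500)                                # defaults
small <- inbag_matrix(n, ntree = 500, sampsize = 10, replace = FALSE)

# Share of (row, tree) pairs that are out-of-bag (count of 0)
mean(boot == 0)     # close to the theoretical value below
(1 - 1 / n)^n       # about 0.365
mean(small == 0)    # exactly 66/76, since each tree sees only 10 rows
```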

rf <- randomForest(eng ~ ., data = joint, proximity = T, replace = F, mtry = 7, maxnodes = 5,

ntree = 500, keep.inbag = T, sampsize = 10, localImp = TRUE)

confusion(joint$eng, predict(rf))

Overall accuracy = 0.461

Confusion matrix

Predicted (cv)

Actual eng psych

eng 0.342 0.658

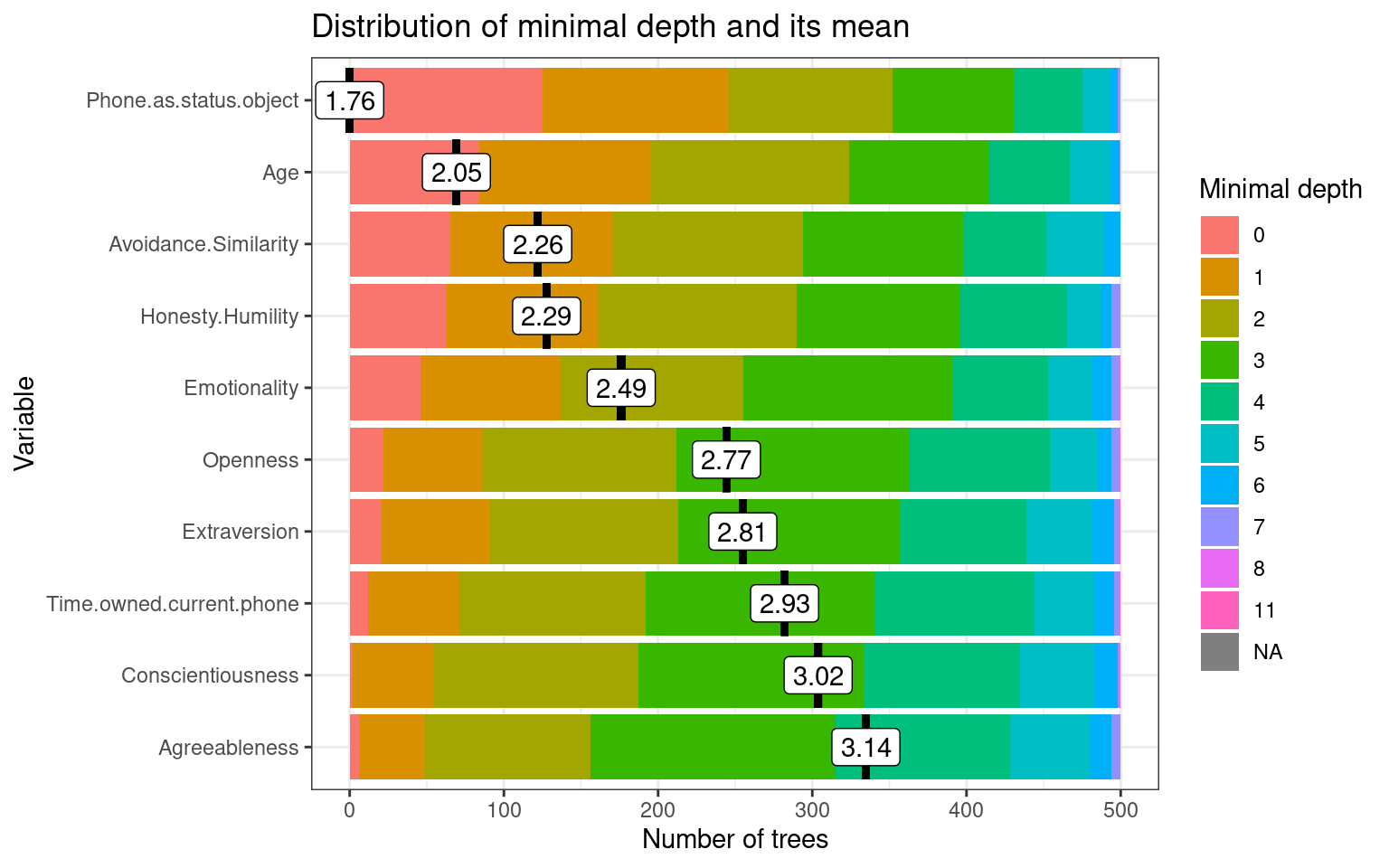

psych 0.421 0.579The forest lets us understand which variables are more important, by identifying how often variables are closer to the root of the tree. Depending on the run, these figures change, but a variable that is frequently selected as the root, or one whose mean depth is low, is likely to be more important.
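Minimal depth is easiest to see on a single tree. The sketch below (a hypothetical helper, min_depth, applied to the built-in iris data rather than the joint data) reads an rpart tree's frame and reports, for each variable, the shallowest depth at which it is used to split; randomForestExplainer summarizes the same idea across all the trees of a forest. It relies on rpart's node numbering, in which node k has children 2k and 2k + 1, so node k sits at depth floor(log2(k)).

```r
library(rpart)

# Minimal depth of each split variable in a single rpart tree (root = depth 0)
min_depth <- function(fit) {
  fr <- fit$frame
  is_split <- fr$var != "<leaf>"
  node <- as.integer(rownames(fr))[is_split]
  depth <- floor(log2(node) + 1e-7)   # small offset guards against rounding
  split_var <- as.character(fr$var[is_split])
  sapply(unique(split_var), function(v) min(depth[split_var == v]))
}

fit <- rpart(Species ~ ., data = iris, method = "class")
min_depth(fit)   # Petal.Length at the root (0), Petal.Width one level down (1)
```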

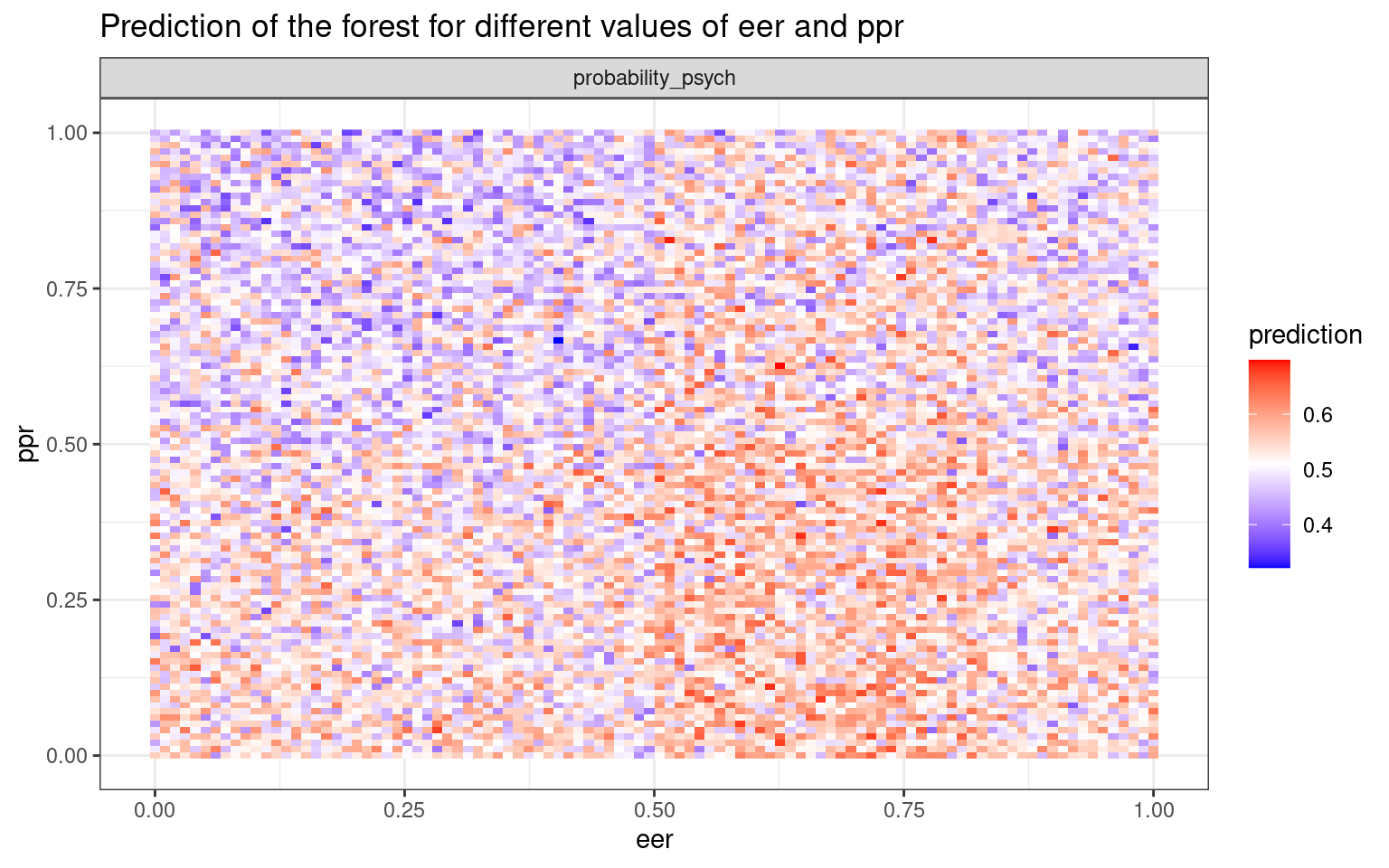

This plots the prediction of the forest for different values of the variables. If the variables are useful, we will tend to see blocks of red/purple indicating the prediction in different regions:

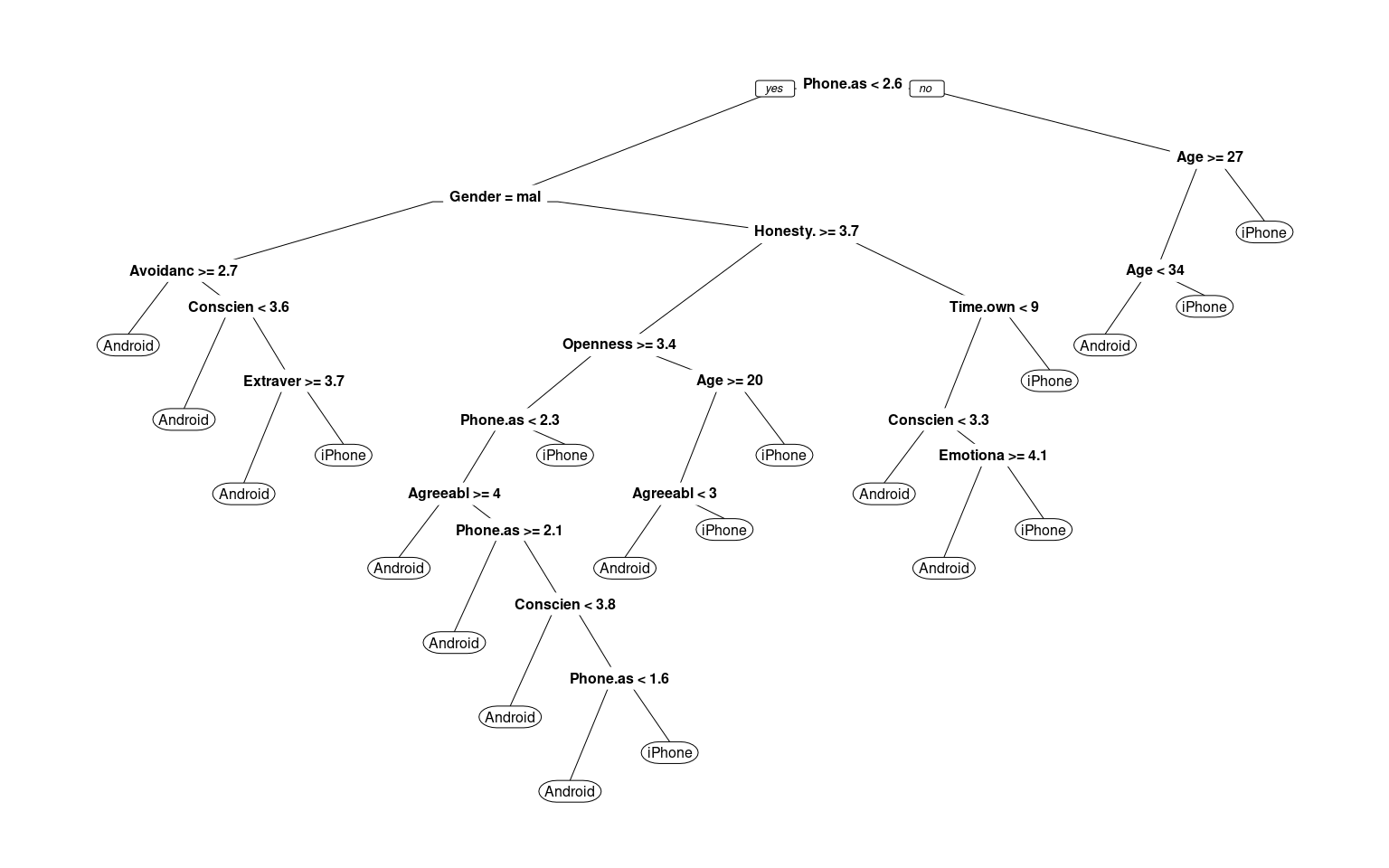

Note that the random forest rarely produces a good classification for the engineers/psychologists data. How does it do for the iPhone data?

phone <- read.csv("data_study1.csv")

phone.dt <- rpart(Smartphone ~ ., data = phone)

prp(phone.dt, cex = 0.5)

confusion(phone$Smartphone, predict(phone.dt, type = "class"))

Overall accuracy = 0.783

Confusion matrix

Predicted (cv)

Actual [,1] [,2]

[1,] 0.658 0.342

[2,] 0.129 0.871

## this doesn't like the predicted value to be a factor:
## phone$Smartphone <- phone$Smartphone == 'iPhone'
## newer versions require this to be a factor:

phone$Smartphone <- as.factor(phone$Smartphone)

phone.rf <- randomForest(Smartphone ~ ., data = phone)

phone.rf

Call:

randomForest(formula = Smartphone ~ ., data = phone)

Type of random forest: classification

Number of trees: 500

No. of variables tried at each split: 3

OOB estimate of error rate: 36.11%

Confusion matrix:

Android iPhone class.error

Android 104 115 0.5251142

iPhone 76 234 0.2451613

confusion(phone$Smartphone, predict(phone.rf))

Overall accuracy = 0.639

Confusion matrix

Predicted (cv)

Actual Android iPhone

Android 0.475 0.525

iPhone 0.245 0.755

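This does not seem to be better than the other models for the iPhone data, but at least it is comparable. It does not seem to do as well as rpart, though note that rpart's 78% is measured on the same data used to build the tree, whereas the random forest's 64% is an out-of-bag estimate.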

Note that the random forest from the ranger package gives roughly equivalent results:

library(ranger)

phone.rg <- ranger(Smartphone ~ ., data = phone)

phone.rg

Ranger result

Call:

ranger(Smartphone ~ ., data = phone)

Type: Classification

Number of trees: 500

Sample size: 529

Number of independent variables: 12

Mtry: 3

Target node size: 1

Variable importance mode: none

Splitrule: gini

OOB prediction error: 36.11 %

treeInfo(phone.rg, 1)

nodeID leftChild rightChild splitvarID splitvarName splitval

1 0 1 2 3 Emotionality 3.35

2 1 3 4 2 Honesty.Humility 3.15

3 2 5 6 4 Extraversion 1.85

4 3 7 8 9 Phone.as.status.object 2.40

5 4 9 10 11 Time.owned.current.phone 18.50

6 5 NA NA NA <NA> NA

7 6 11 12 8 Avoidance.Similarity 2.40

8 7 13 14 6 Conscientiousness 3.55

9 8 15 16 4 Extraversion 3.25

terminal prediction

1 FALSE <NA>

2 FALSE <NA>

3 FALSE <NA>

4 FALSE <NA>

5 FALSE <NA>

6 TRUE iPhone

7 FALSE <NA>

8 FALSE <NA>

9 FALSE <NA>

[ reached 'max' / getOption("max.print") -- omitted 212 rows ]

Overall accuracy = 0.639

Confusion matrix

Predicted (cv)

Actual Android iPhone

Android 0.470 0.530

iPhone 0.242 0.758

For the examples we looked at, random forests did not perform that well. However, for large, complex classifications they can be both more sensitive and more convincing than single trees, because they provide probabilistic answers and importance weightings for each variable. They do lose some of the simplicity of a single tree and, as we saw, don’t always improve the performance of the classifier.

Libraries and functions.

Decision Trees:

- Faraway, Chapter 13

- Library: rpart (function rpart)

Random Forest Classification:

- References:
  - https://www.r-bloggers.com/predicting-wine-quality-using-random-forests/
  - https://www.stat.berkeley.edu/~breiman/RandomForests/

- Library: randomForest (function randomForest)

- Library: ranger (a fast random forest implementation)