Some years ago I saw a letter to the editor about the need for a multitasking system. The writer said, "I don't care about multitasking because I can only do one thing at a time." Really? Does this person only do one thing at a time? The letter continues: "I finish my Word document, print it, fire up my modem to connect to the Internet, read my e-mail, and go back to work on another document." Does this person use his time efficiently? Many of us might suggest that this person could fire up his modem while the printer is printing, or work on another document while the previous one is being printed. Good suggestions, indeed. In fact, we multitask all the time in our daily lives. For example, you might watch your favorite TV program or movie while enjoying your popcorn. Or, while you are printing a long document, you might read a newspaper or company news. There are many such examples in which we do two or more tasks simultaneously. This is a form of multitasking!

Multitasking is even more common in industry. While each worker on an assembly line seems to work sequentially, there can be multiple production lines, all of which perform the same task concurrently. Moreover, the engine assembly lines produce engines while other lines produce other components. Of course, the car assembly lines run concurrently with all of the other lines. The final product is the result of these concurrently running assembly and production lines. Without this type of "parallelism," Detroit would not have been able to produce enough airplanes and tanks for World War II, or enough automobiles to meet our demand.

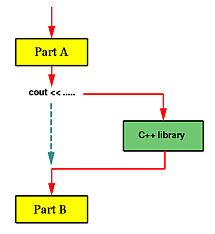

Unfortunately, until you learn how to split a program into multiple execution threads, every program you write contains a single execution thread. The accompanying diagram shows an example. Suppose we have a program of two parts, Part A and Part B. After Part A finishes its computation, we use some cout statements to print out a large amount of output. As we all know, when a program prints, control is transferred to a function in the C++ library and the execution of that program is essentially suspended, shown as a dashed line in the diagram, until the printing completes. Once the printing is done, the execution of the program resumes and starts the computation of Part B. Is there anything wrong with this? No, we are used to it and we have been trained to program this way ever since CS101. However, is this way of programming good enough in terms of efficiency? It depends; but, in many situations, it is not good enough.

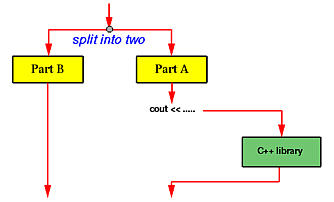

If Part B must use some data generated by Part A, then Part B perhaps has to wait until the output of cout completes. On the other hand, in many situations, Part A and Part B are independent of each other, or one may slightly rewrite both parts so that they do not depend on each other. In this case, Part B does not have to wait until cout completes. In fact, this is the key point! Therefore, before the execution of Part A, the program can be split into two execution threads, one for Part A and the other for Part B. See the accompanying diagram. In this way, both execution threads share the CPU and all resources allocated to the program. Moreover, while Part A is performing its output, which causes Part A to wait, Part B can take the CPU and execute. As a result, this version is more efficient than the previous one. Furthermore, in a system with more than one CPU, it is possible that the system will run both Part A and Part B at the same time, one on each CPU.
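To make the idea of splitting a program into two execution threads concrete, here is a minimal sketch using `std::thread` from the C++ standard library. The functions `part_a`, `part_b`, and `run_both` are hypothetical names invented for this illustration, and the two computations are placeholders; the only assumption that matters is that Part B needs nothing from Part A.

```cpp
#include <cassert>
#include <functional>
#include <ostream>
#include <sstream>
#include <string>
#include <thread>

// Part A (hypothetical): compute some values and print a large amount of
// output. The stream is a parameter so the caller decides where the output
// goes (std::cout in a real program).
void part_a(std::ostream& out, int count) {
    for (int i = 0; i < count; ++i) {
        out << i * i << '\n';  // the thread may have to wait while printing
    }
}

// Part B (hypothetical): an independent computation that uses no data
// produced by Part A.
long long part_b(int count) {
    long long sum = 0;
    for (int i = 1; i <= count; ++i) sum += i;
    return sum;
}

// Runs Part A and Part B as two execution threads and returns Part B's result.
long long run_both(std::ostream& out, int count) {
    assert(count >= 0);
    long long b_result = 0;
    std::thread a(part_a, std::ref(out), count);
    std::thread b([&b_result, count] { b_result = part_b(count); });
    a.join();  // wait for both threads before using their results
    b.join();
    return b_result;
}
```

Calling `run_both(std::cout, 100)` prints the squares from Part A and returns the sum computed by Part B. Only Part A writes to the stream, and `b_result` is read only after `join()`, so the two threads never touch the same data at the same time. With GCC or Clang on Linux you may need the `-pthread` flag when compiling.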

In real programming practice, a program may use an execution thread for handling keyboard/mouse input, a second execution thread for handling screen updates, and a number of other threads for carrying out various computation tasks.

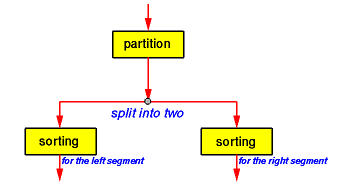

Consider the quicksort algorithm, which consists of two steps in each recursion. First, the partition step divides the input array segment into two segments such that every element in the left segment is smaller than or equal to every element in the right segment. Second, the sorting step simply sorts the left segment and the right segment. After these two steps complete, the input segment is sorted. While it is not so obvious whether the partition step can use multiple execution threads, one can split the execution of the sorting step into two, one for sorting the left segment and the other for sorting the right one. This is shown in the accompanying diagram.
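The split in the sorting step can be sketched as follows. This is only an illustration under stated assumptions: the names `partition_segment` and `threaded_quicksort` are hypothetical, the partition uses the last element as the pivot (the text does not prescribe a partition method), and a `depth` parameter, which is not part of the description above, limits how many levels of recursion create a new thread.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <thread>
#include <utility>
#include <vector>

// Partition v[lo, hi) around its last element and return the pivot's final
// index. Elements left of that index are <= pivot, elements right are > pivot.
std::size_t partition_segment(std::vector<int>& v, std::size_t lo, std::size_t hi) {
    int pivot = v[hi - 1];
    std::size_t store = lo;
    for (std::size_t i = lo; i + 1 < hi; ++i) {
        if (v[i] <= pivot) std::swap(v[i], v[store++]);
    }
    std::swap(v[store], v[hi - 1]);
    return store;
}

// Sort v[lo, hi). While depth > 0, a new thread sorts the left segment and
// the current thread sorts the right one; after that, recursion is sequential.
void threaded_quicksort(std::vector<int>& v, std::size_t lo, std::size_t hi, int depth) {
    assert(lo <= hi && hi <= v.size());
    if (hi - lo < 2) return;
    std::size_t p = partition_segment(v, lo, hi);
    if (depth > 0) {
        std::thread left(threaded_quicksort, std::ref(v), lo, p, depth - 1);
        threaded_quicksort(v, p + 1, hi, depth - 1);
        left.join();  // the segment is sorted only after both halves finish
    } else {
        threaded_quicksort(v, lo, p, 0);
        threaded_quicksort(v, p + 1, hi, 0);
    }
}
```

A call such as `threaded_quicksort(v, 0, v.size(), 2)` creates at most three extra threads. The two threads work on disjoint segments of the array, which is why they do not need to coordinate until the `join()`.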

Consider another simple problem. Suppose we have two sorted arrays a[ ] and b[ ] of n elements each. For simplicity, we assume that all of these 2n elements are different. Our job is to merge these two arrays into a single sorted one. Everyone who has taken a data structures course knows how to do it; however, let us look at the same problem from a different angle.

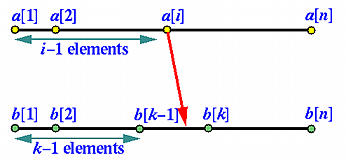

Take an element from array a, say a[i]. Because a is sorted, we know how many elements of a are smaller than a[i]: with C++'s 0-based indexing, exactly i of them (a[0] through a[i-1]). If we can figure out how many elements of b are smaller than a[i], we will know the exact location of a[i] in the sorted array. This is illustrated in the accompanying diagram.

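With a slightly modified binary search, we can easily determine where a[i] would fall in array b. There are only three possibilities:

- a[i] is smaller than every element of b, so no element of b precedes a[i] in the sorted array.
- a[i] lies between two adjacent elements b[k-1] and b[k], so the elements of b up to and including b[k-1] precede a[i].
- a[i] is larger than every element of b, so all n elements of b precede a[i].

In each case, the position of a[i] in the sorted array is the number of elements of a that precede it plus the number of elements of b that precede it.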
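The position computation can be sketched in code as follows. This is an illustration, not necessarily the scheme the author has in mind: it uses `std::lower_bound` as the binary search, and it creates one thread per input array rather than one per element. The names `place_by_rank` and `merge_by_rank` are hypothetical. Both arrays are assumed to be sorted and all values distinct.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

// Put every element of src into out at index
//   (its own index in src) + (number of elements of other smaller than it).
void place_by_rank(const std::vector<int>& src, const std::vector<int>& other,
                   std::vector<int>& out) {
    for (std::size_t i = 0; i < src.size(); ++i) {
        // Binary search: first element of other that is not less than src[i].
        auto it = std::lower_bound(other.begin(), other.end(), src[i]);
        std::size_t smaller = static_cast<std::size_t>(it - other.begin());
        out[i + smaller] = src[i];
    }
}

// Merge two sorted arrays with two threads, one handling a and one handling b.
std::vector<int> merge_by_rank(const std::vector<int>& a, const std::vector<int>& b) {
    assert(std::is_sorted(a.begin(), a.end()) && std::is_sorted(b.begin(), b.end()));
    std::vector<int> out(a.size() + b.size());
    std::thread ta(place_by_rank, std::cref(a), std::cref(b), std::ref(out));
    std::thread tb(place_by_rank, std::cref(b), std::cref(a), std::ref(out));
    ta.join();
    tb.join();
    return out;
}
```

Because all values are distinct, every element has a unique final position, so the two threads never write to the same slot of `out` and no locking is needed. For example, merging `{1, 4, 7, 10}` with `{2, 3, 8, 12}` places 4 at index 1 + 2 = 3, since one element of a and two elements of b are smaller than it.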

Example: Matrix Multiplication

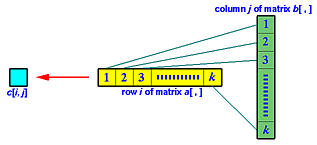

Another interesting application is the multiplication of two matrices. Suppose we have two matrices $A_{m \times k}$ (m rows and k columns) and $B_{k \times n}$ (k rows and n columns) and want to compute the product of A and B into a matrix C of m rows and n columns. The entry of C on row i and column j is the sum of the products of the corresponding elements on row i of matrix A and column j of matrix B:

$$C_{i,j} = \sum_{l=1}^{k} A_{i,l} \, B_{l,j}$$

How can we use multiple execution threads to solve this problem? We notice that the computation of $C_{i,j}$ is independent of the computation of any other entry of matrix C. Because of this, after matrices A and B are read in, the main program can create m×n execution threads, one for each entry of matrix C. Each of these execution threads computes the products of the corresponding elements, sums them up, and stores the result into matrix C.
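The one-thread-per-entry scheme can be sketched as follows. The type alias `Matrix` and the function name `multiply_threaded` are hypothetical, chosen only for this illustration, and the matrices are stored as vectors of rows.

```cpp
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Compute C = A * B with one execution thread per entry of C.
// A is m x k and B is k x n.
Matrix multiply_threaded(const Matrix& a, const Matrix& b) {
    assert(!a.empty() && !b.empty() && a[0].size() == b.size());
    const std::size_t m = a.size(), k = b.size(), n = b[0].size();
    Matrix c(m, std::vector<double>(n, 0.0));
    std::vector<std::thread> workers;
    workers.reserve(m * n);
    for (std::size_t i = 0; i < m; ++i) {
        for (std::size_t j = 0; j < n; ++j) {
            // Each thread reads A and B and writes only c[i][j],
            // so the threads do not interfere with each other.
            workers.emplace_back([&a, &b, &c, i, j, k] {
                double sum = 0.0;
                for (std::size_t l = 0; l < k; ++l) sum += a[i][l] * b[l][j];
                c[i][j] = sum;
            });
        }
    }
    for (std::thread& t : workers) t.join();  // wait for all m*n entries
    return c;
}
```

For a 2×3 matrix times a 3×2 matrix this creates four threads. Creating one thread per entry is rarely practical for large matrices, since each thread has a start-up cost; in practice one might give each thread a whole row or a block of entries instead.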

It takes k multiplications to compute a single entry of matrix C. Since there are m×n entries in C, the program with only one thread performs m×n×k multiplications. In the above scheme, each thread performs k multiplications and there are m×n threads, so the total amount of work is the same. If we have only one CPU, the multiple execution threads version may not be as efficient as the single execution thread one, because creating threads and switching among them adds overhead; however, if there is more than one CPU, each CPU may be assigned a number of execution threads and the execution efficiency is higher. In the extreme case in which we have m×n CPUs, all execution threads run at the same time, so the whole matrix multiplication takes only the time needed to compute one entry. Thus, ignoring the cost of creating and managing threads, it is m×n times faster than the single execution thread version.

By now, you perhaps have a good feeling for why splitting a program into multiple execution threads may increase execution efficiency. However, just as in the movie Multiplicity, creating too many execution threads may lead to a chaotic situation, because splitting a program is only half of the job: the threads must also communicate with each other properly in order to work together. Thus, in addition to learning how to create execution threads, we also have to learn how to manage threads and how to synchronize them.

The above examples may look a little unrealistic, and their benefits seem to be only about program efficiency. There are other benefits of using multiple execution threads. We shall return to this issue on a later page.

In what follows, we shall simply say "thread" rather than "execution thread."